Working around unexpected events

“Today, however successful they are, robots are still mainly standalone applications e.g. used in automated fabrication,” says Pieter Simoens, Professor at IDLab, an imec research group at Ghent University. “In that situation, they are often fixed on one spot or even caged to protect the people working in their environment. These robots take their input from their own sensors and from sensors in the environment and are able to react to a limited range of predictable events.”

But in the future, robots are bound to get more freedom to move and interact. There will be, for example, butler robots that move through buildings to assist us, autonomous vehicles and drones that find their way to deliver services and packages, and automated search and rescue operations, to name but a few.

“The more autonomy these robots get, the more they will run into unexpected events,” says Simoens. “They will move through environments that are forever changing: doors get locked that were open before, furniture has changed places, roads are partially blocked... And during their actions, they may come upon other robots which will inevitably result in conflicts.”

Dealing with such unexpected events requires three things. First, free-roaming robots can only function properly if they are in continuous two-way communication with the smart environment, i.e. if they become part of the IoT. They receive sensor information, but they also signal their own position and intention, acting as a kind of moving, dynamic sensor. A butler robot in a smart home will, for example, signal where it is heading so that no doors are inadvertently closed. And the cleaning robot in that same smart home will signal where it has just scrubbed the floor, so that no butler robot slips on the wet surface.
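To make the idea of a robot as a "moving, dynamic sensor" concrete, here is a minimal sketch in Python. It is not the researchers' implementation: the in-memory `Bus`, the topic names `intent` and `wet_floor`, and the classes `DoorController` and `ButlerRobot` are all hypothetical stand-ins for whatever messaging layer a real smart home would use.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str
    topic: str      # hypothetical topics: "intent", "wet_floor"
    payload: dict


class Bus:
    """In-memory stand-in for the smart environment's messaging layer."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, message):
        for handler in list(self._subscribers[message.topic]):
            handler(message)


class DoorController:
    """Keeps doors open when a robot announces a route through them."""

    def __init__(self, bus, doors):
        self._doors = set(doors)
        self.hold_open = set()
        bus.subscribe("intent", self._on_intent)

    def _on_intent(self, message):
        self.hold_open |= self._doors & set(message.payload["route"])


class ButlerRobot:
    """Publishes its intended route and listens for wet-floor reports."""

    def __init__(self, bus, name):
        self.bus = bus
        self.name = name
        self.wet_zones = set()
        bus.subscribe("wet_floor", self._on_wet_floor)

    def _on_wet_floor(self, message):
        self.wet_zones.add(message.payload["zone"])

    def announce_route(self, route):
        self.bus.publish(Message(self.name, "intent", {"route": route}))

    def is_route_safe(self, route):
        return not any(zone in self.wet_zones for zone in route)


bus = Bus()
doors = DoorController(bus, {"kitchen_door"})
butler = ButlerRobot(bus, "butler")

# The butler signals where it is heading; the door controller reacts.
butler.announce_route(["hall", "kitchen_door", "kitchen"])
# The cleaning robot reports the floor it has just scrubbed.
bus.publish(Message("cleaner", "wet_floor", {"zone": "hall"}))
```

After these calls, `doors.hold_open` contains `kitchen_door`, and `butler.is_route_safe(["hall", "kitchen"])` is `False` because the hall has just been reported as wet. A real deployment would replace the in-memory bus with a networked publish/subscribe service.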

Second, instead of stubbornly proceeding to their goal, the robots will continuously adapt their next steps, maybe taking a pause or a detour before proceeding. “We’re working on techniques where only high-level planning is done upfront,” says Pieter Simoens. “These more abstract tasks are then unpacked into more concrete tasks in the moment they have to be executed. So the concrete actions that move the robot are only planned when that action has to be taken and the immediate context is available.”
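The deferred unpacking described above can be sketched as follows. This is an illustrative assumption, not the planner used at IDLab: the task names, the `routes` and `locked_doors` context keys, and the functions `expand_go_to` and `execute` are invented for the example. The point is only that the abstract plan is fixed upfront, while each concrete action list is computed from the context sampled at the moment of execution.

```python
def expand_go_to(room, context):
    """Turn the abstract task 'go to room' into concrete moves,
    using only the context that is available right now."""
    for route in context["routes"][room]:
        if not any(step in context["locked_doors"] for step in route):
            return [f"move_through:{step}" for step in route]
    return ["wait"]  # no open route at the moment: pause instead of pushing on


def execute(high_level_plan, get_context):
    """Run abstract tasks in order, expanding each one just in time."""
    log = []
    for task, arg in high_level_plan:
        context = get_context()  # sampled at execution time, not upfront
        if task == "go_to":
            actions = expand_go_to(arg, context)
        else:
            actions = [f"{task}:{arg}"]
        log.extend(actions)
        if actions == ["wait"]:
            break  # pause here; the remaining tasks are retried later
    return log


# Hypothetical context: door_a has been locked since the plan was made.
def current_context():
    return {
        "routes": {"kitchen": [["door_a"], ["door_b", "corridor"]]},
        "locked_doors": {"door_a"},
    }


plan = [("go_to", "kitchen"), ("fetch", "water")]
actions = execute(plan, current_context)
```

Here the robot takes the detour through `door_b` and the corridor because `door_a` is locked when the `go_to` task is unpacked. Had every route been blocked, the log would end with a single `wait` and the `fetch` task would be left for a later attempt.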

Third, robots and actuators collaborating in the same smart space will have to coordinate and negotiate on the best course of action. This can e.g. be solved by having the various robots and actuators send their plans to a common platform that will then resolve the conflicts, work out a coordinated plan, and report back to the robots.
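As a rough illustration of such a common platform, the sketch below uses a simple prioritized reservation scheme. This is an assumed technique chosen for brevity, not the method the researchers describe: each robot submits its plan as a list of zones, one per time step, and the hypothetical `coordinate` function delays lower-priority robots until their plan no longer claims a zone that another robot has already reserved for the same time step. It assumes a delayed robot waits outside the shared space, and it ignores the case where two robots try to swap adjacent zones.

```python
def coordinate(plans, priority):
    """Return a start delay per robot so that no two robots occupy
    the same zone during the same time step.

    plans:    dict mapping robot name -> list of zones, one per time step
    priority: list of robot names, highest priority first
    """
    reserved = set()   # (time_step, zone) pairs already granted
    delays = {}
    for robot in priority:
        plan = plans[robot]
        delay = 0
        # Shift the whole plan later until it fits the reservation table.
        while any((t + delay, zone) in reserved for t, zone in enumerate(plan)):
            delay += 1
        for t, zone in enumerate(plan):
            reserved.add((t + delay, zone))
        delays[robot] = delay
    return delays


# Two robots both want the same doorway at time step 1.
submitted = {
    "butler": ["hall", "door", "kitchen"],
    "cleaner": ["study", "door", "kitchen"],
}
delays = coordinate(submitted, priority=["butler", "cleaner"])
```

With these inputs the butler proceeds immediately and the cleaner is told to start one time step later, so it reaches the doorway after the butler has passed. The platform would then report these delays back to the robots as their coordinated plan.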

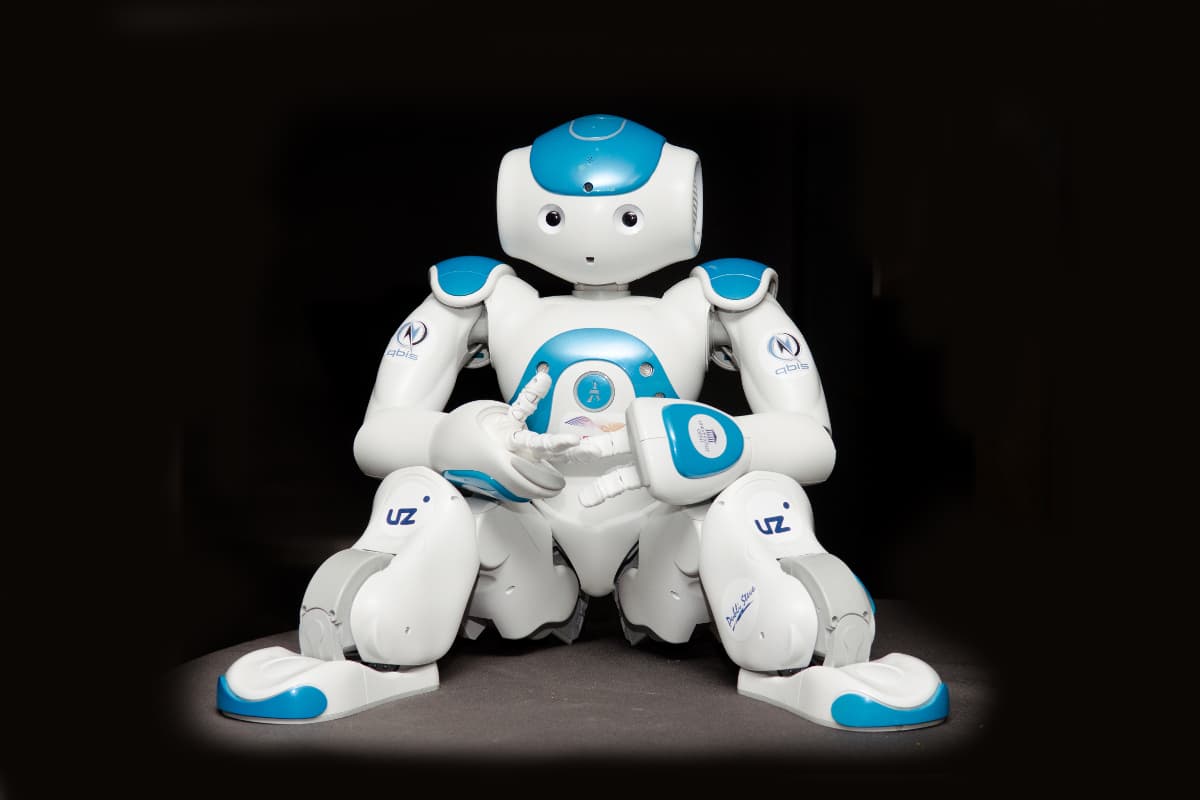

Zora, ready to provide care services for elderly residents

The human factor

“The most unpredictable factor that robots will encounter in smart spaces is people,” adds An Jacobs, sociologist at SMIT, an imec research group at VUB. “If we want to enhance our smart environment with robots that are useful and even likable, we will need to understand – much better than today – how people will want their robots, which services they want from robots, and which interaction with robots they deem appropriate.”

“To design appropriate robotic services, we can start with the existing and extensive body of work on human-computer interaction. But robots bring a new dynamic into play: physical interaction and context-based intelligence. So the R&D has to be extended into a whole new territory.”

One of the projects where the imec researchers validated their research involved designing a new type of robot targeted at the production lines of the Audi factory in Brussels. Together with a number of specialized companies, they designed a ‘cobot’, a collaborative robot that can assist its human colleagues in a much more flexible way than traditional manufacturing robots do. Says Jacobs: “One challenge we looked into was how to make flexible cobots sufficiently robust for use in noisy, dynamic settings such as a car manufacturing line. Secondly, we also investigated the human side of the equation: how do machine operators want to interact with robots? And how do they expect robots to help them with their daily operations?”

One of the key issues in human-robot interaction is teaching the robot what it can and cannot do. That typically requires advanced programming skills, which is a serious bottleneck for flexible use. But in their cobot project, the imec researchers and their industrial partners came up with a new way to quickly instruct robots, an intuitive and efficient method that can be used by every machine operator, and that allows them to flexibly cope with on-the-fly and complex production changes.

“To achieve this,” continues Jacobs, “we used multimodal sensor data from heat, depth and color cameras and electrical current sensors – as well as from a newly developed cheap torque sensor. Through these, the robot gets a detailed view on the factory environment and the operators. And thanks to a facial and gesture recognition engine, it is capable of recognizing its human co-workers and interpreting the operator’s gestures and movements; input on which it can then act.”

Setting up living labs

When designing their cobot, the researchers could not possibly predict all scenarios beforehand. So they took another innovative approach.

Until now, robots were mostly developed in a very controlled and restricted environment with minimal, predictable interaction. “But as we want them to thrive in more unpredictable environments,” says Jacobs, “we also have to change the way we design and test them. That is why we are developing our robots in a living lab, a smart space that resembles the places the robots will be deployed in, including surrounding IoT sensor feedback and real user interaction, as we did in the imec.icon WONDER project.”

The goal of the WONDER project was to set up a humanoid robot for 24/7 interactive and personalized care service. Senior citizens who live in nursing homes – especially those with dementia – require constant attention. With WONDER, we wanted to see how we could provide a service to keep a constant eye on residents and intervene when necessary, using personalized feedback to calm them down. Such an additional service will complement the human touch of the caregivers, reduce the need for pharmaceutical interventions or physical restrictions, and thus improve the quality of life of the residents. The service with the robot was developed and refined with the intensive assistance of real caregivers – nurses, therapists, doctors – in the setting of an operating nursing home. “This enabled the technologists, caregivers, and researchers to keep refining the concept, learning additional possibilities and especially discovering the limits set by the residents.”

Since then, imec has also built a dedicated living lab: the HomeLab in Ghent, the first independent testing ground for smart home applications and services in Europe. HomeLab is a two-story house of 600 m² in which researchers and users can live temporarily to test and co-create IoT and IoRT (Internet of Robotic Things) prototypes or products. With technical corridors in every room and an open home automation system, it offers tremendous flexibility to run smart applications such as robots and test their interoperability with other systems.

“One of the first projects that we’re using the HomeLab for is the imec.icon ROBO-CURE project,” says Pieter Simoens. “The goal is to develop a humanoid robot as assistant for children with newly diagnosed diabetes. During an initial stay in the hospital, the robot will serve as assistant for personalized therapy and therapy education. Examples are helping the children with their personalized food and insulin intake and teaching them how to correctly use the insulin and glucose sensor devices. The final goal is to then send the robot with the children to their home for a couple of days, as a way to prolong the help with their initial therapy. In the HomeLab, we will study how our robot can assist patients in everyday situations. Eventually, we will have people sleeping over, staying the weekend, observing possible situations, conflicts, and opportunities for potential assisted care.”

HomeLab

Want to know more?

- The imec.icon research program promotes demand-driven, cooperative research. Over a period of typically two years, multidisciplinary research teams of (imec) scientists, industrial partners and/or non-profit organizations work together to develop digital solutions that find their way into the product portfolios of the participating partners. For more info, please visit this webpage or email icon@imec.be.

- More info on the three imec.icon projects that are mentioned in this text can be found on the respective project pages of ClaXon, WONDER and ROBO-CURE.

- Audi Brussels Introduces Cobot Walt, a New Generation of Robot, in its Production Line, imec press release.

- Video on the WONDER project, showing the Zora robot helping residents of a care home.

- Video showing HomeLab, imec’s dedicated testing ground for robotic services in a smart environment.

An Jacobs is a professor at the department of Communication Science of the VUB (Brussels – Belgium). She heads the unit ‘Digital Health and Work’ at SMIT, a research unit of imec at the VUB. Her research focuses on living labs and user-centered design, contributing to the fields of human-robot collaboration, person-centered health management with wearables and health apps, and people-centered algorithms in decision support systems in a health and industrial context.

Pieter Simoens is professor at IDLab, a research unit of imec at Ghent University. His research is focused on overcoming resource constraints of agents in distributed intelligent systems. He looks into AI techniques such as symbolic planners, deep learning and swarm intelligence to realize autonomous intelligent agent behavior. Pieter also works on mapping these algorithms on neuromorphic hardware to improve the energy efficiency and allow faster processing.

Published on:

1 March 2018