In 1950, the legendary scientist Alan Turing proposed a test to investigate whether a computer could ever match the level of human reasoning and communication. The concept is simple: a human jury interviews an unknown conversation partner to determine whether it is dealing with a human being or a computer. Based on a prediction in Turing's paper, a computer program is commonly said to pass the test if it deceives more than thirty percent of the jury. In 2014, two programmers, Vladimir Veselov and Eugene Demchenko, made global headlines when their chatbot, called ‘Eugene Goostman’, reportedly became the first program in history to do so, misleading a third of the judges at a test event held at the Royal Society in London.

By now, chatbots and digital assistants are fairly well established in commercial services and consumer applications. Nevertheless, there is still a lot of work to be done in the domain of Human-like AI. Steven Latré, director of IDLab, an imec research group at the University of Antwerp, explains the remaining shortcomings and how they might be overcome.

Alexa suggests porn to a toddler

For instance, Eugene Goostman received a lot of criticism because its creators had deliberately chosen to present it as a thirteen-year-old Ukrainian boy, a trick that conveniently explained away many of the program's imperfections and put the jury on the wrong track. The internet is also full of hilarious videos of conversations between consumers and their digital assistants. Probably the most famous one shows Alexa, Amazon's digital assistant, suggesting a series of pornographic terms to a toddler who is trying to request a song.

Many critics say, often rightly, that machine learning is still merely an advanced form of pattern recognition, with rather straightforward questions and answers and a logical chain of conclusions between them. To escape this Babylonian confusion, AI systems must become better at responding to follow-up questions and at taking context into account. As a result, AI programs should become better at responding flexibly to new and even unknown environments and situations.

A fridge in the desert

Part one of the answer to these challenges is object recognition. For example, you can teach an AI program what a refrigerator is by showing it a large number of pictures of refrigerators and related appliances.

But place that fridge in the desert, and chances are the AI program will not recognize the object correctly.

The trick is to process more contextual knowledge, for example by linking different input signals and reasoning about their logical relationship. Do I see something that looks like a car? Can I recognize an engine sound in the audio stream? And what can I infer from the squeaking brakes of a cyclist approaching the car? Such knowledge should help AI systems to better understand their environment and make accurate predictions. On top of that, there is an element of common sense. As human beings, we know that a toddler is very unlikely to ask for porn. And in the unlikely event that one does, it would be better not to simply comply with the request.

Every human being has common sense to a greater or lesser extent, and it is something we want to teach AI systems as well.

Lifelong learning

Part two of the solution lies in the ability to apply acquired knowledge in a new context. Think of industrial applications: an AI solution developed for company X has to be almost completely rebuilt, including on new datasets, if company Y also wants to use it. In other words, AI programs are not yet capable of carrying their experience over to a new task.

For instance, an AI program can be very good at chess, yet it can easily be beaten by a human as soon as you change a single rule of the game.

As humans, we adapt to such situations very well. When you change jobs, the experience you gained in a different context is valued. An AI program will therefore also have to be capable of lifelong learning. It should also become able to understand its own reasoning and explain it in a way that people can understand. A relevant but tragic example is the recent Boeing 737 MAX crashes: a highly advanced aircraft with a high degree of autonomy in responding to certain situations, but one that could not explain to the pilots why it carried out a number of interventions. Those interventions were based on the pre-programmed goal of keeping the aircraft in the air, yet they actually caused it to crash.

In what we call Human-like AI, we do not have the ambition to create systems that surpass humans in terms of intelligence or communicative skills.

What we do want is to put an end to the unnatural voice commands you now often have to use to operate machines, and to conversations with chatbots that invariably reach a dead end with phrases such as "please reformulate your question". Instead, we want to move towards a world in which AI systems evolve into mature conversational agents.

Want to know more?

- IDLab website.

- A YouTube video of Alexa suggesting porn to a toddler.

- A video on how you can fool AI object recognition.

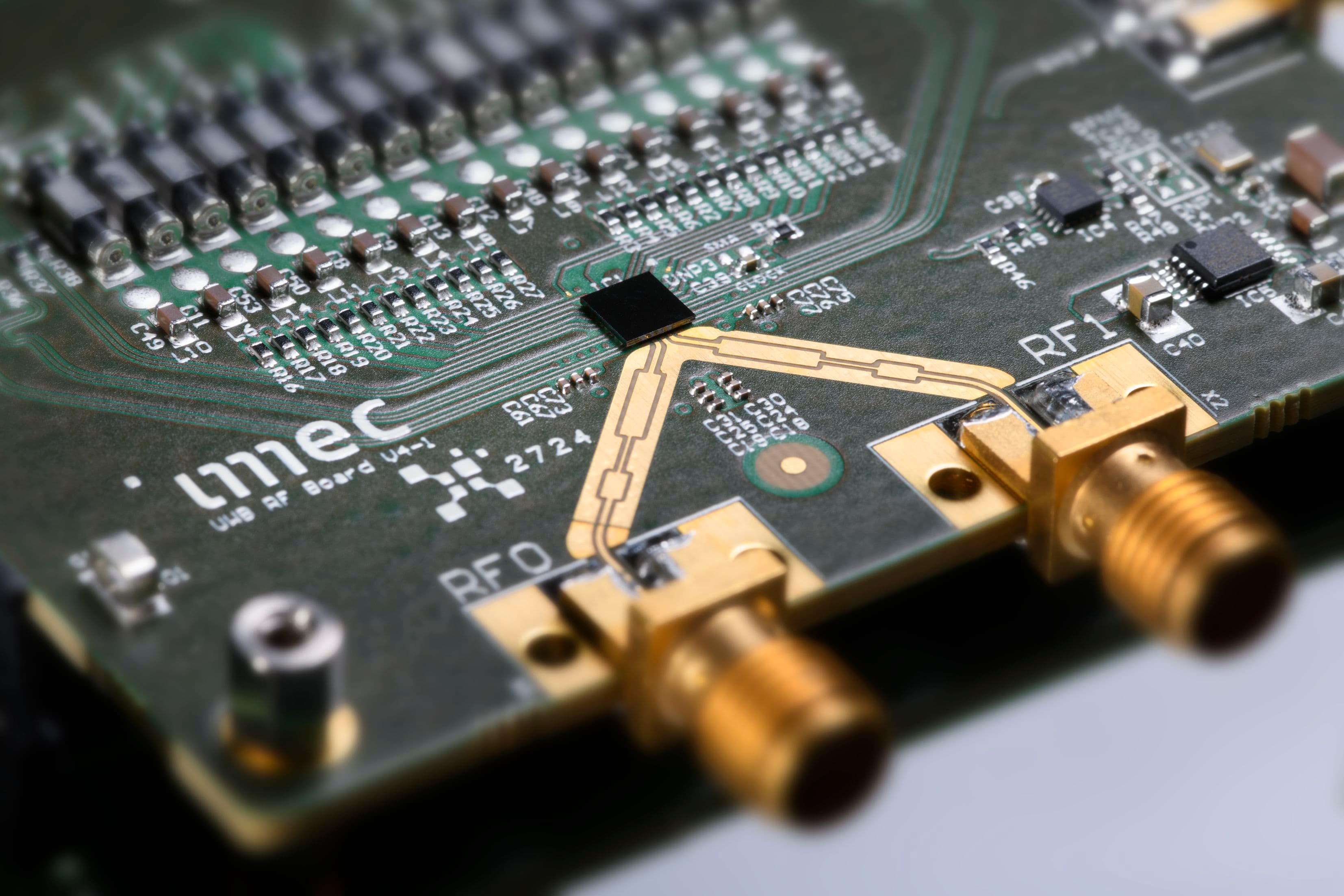

Prof. Steven Latré is an associate professor at the University of Antwerp and director at the research centre imec, Belgium. He leads the IDLab Antwerp research group (85+ members), which performs applied and fundamental research in the area of communication networks and distributed intelligence. His personal research interests are in the domain of machine learning and its application to wireless network optimization.

He received a Master of Science degree in computer science from Ghent University, Belgium, and a PhD in Computer Science Engineering from the same university, with a dissertation titled “Autonomic Quality of Experience Management of Multimedia Services”. He is the author or co-author of more than 100 papers published in international journals or in the proceedings of international conferences. He received the IEEE COMSOC award for best PhD in network and service management in 2012 and the IEEE NOMS Young Professional award in 2014, and he is a member of the Young Academy Belgium.

Published on:

13 May 2019