Today, artificial intelligence often runs in the cloud, with data sent back and forth between the application and AI algorithms running on energy-guzzling cloud infrastructure. Easics uses its expertise in system-on-chip design to develop small, low-power and affordable AI engines that run locally, close to your sensors, e.g. inside a smart camera, sorting machine, robot or vehicle. The result is more secure and faster, with low and predictable latency.

Artificial intelligence is becoming the preferred way to make applications and production facilities smarter. With machine learning, the most successful form of AI, applications can learn from real data, mostly sensor readings. Engineers therefore no longer have to program intelligence explicitly, and applications can be made smart faster, more cheaply and more flexibly.

Today’s smart factories crave self-learning engines that make fast in-line decisions close to the applications and sensors. Think of in-line quality control, factory automation, flexible robotics, automated sorting, ... Such on-premise AI engines need to be low-latency, energy-efficient, small and cost-effective. That combination of requirements is hard to achieve with GPU-based cloud computing. What is needed instead is highly customized yet affordable hardware with long-term availability.

To create such hardware, easics is developing the next generation of its embedded AI framework, which automatically generates hardware implementations of the deep neural networks that make your specific application smart. Easics maps these AI engines onto semiconductor chips, using FPGA or custom ASIC technology.

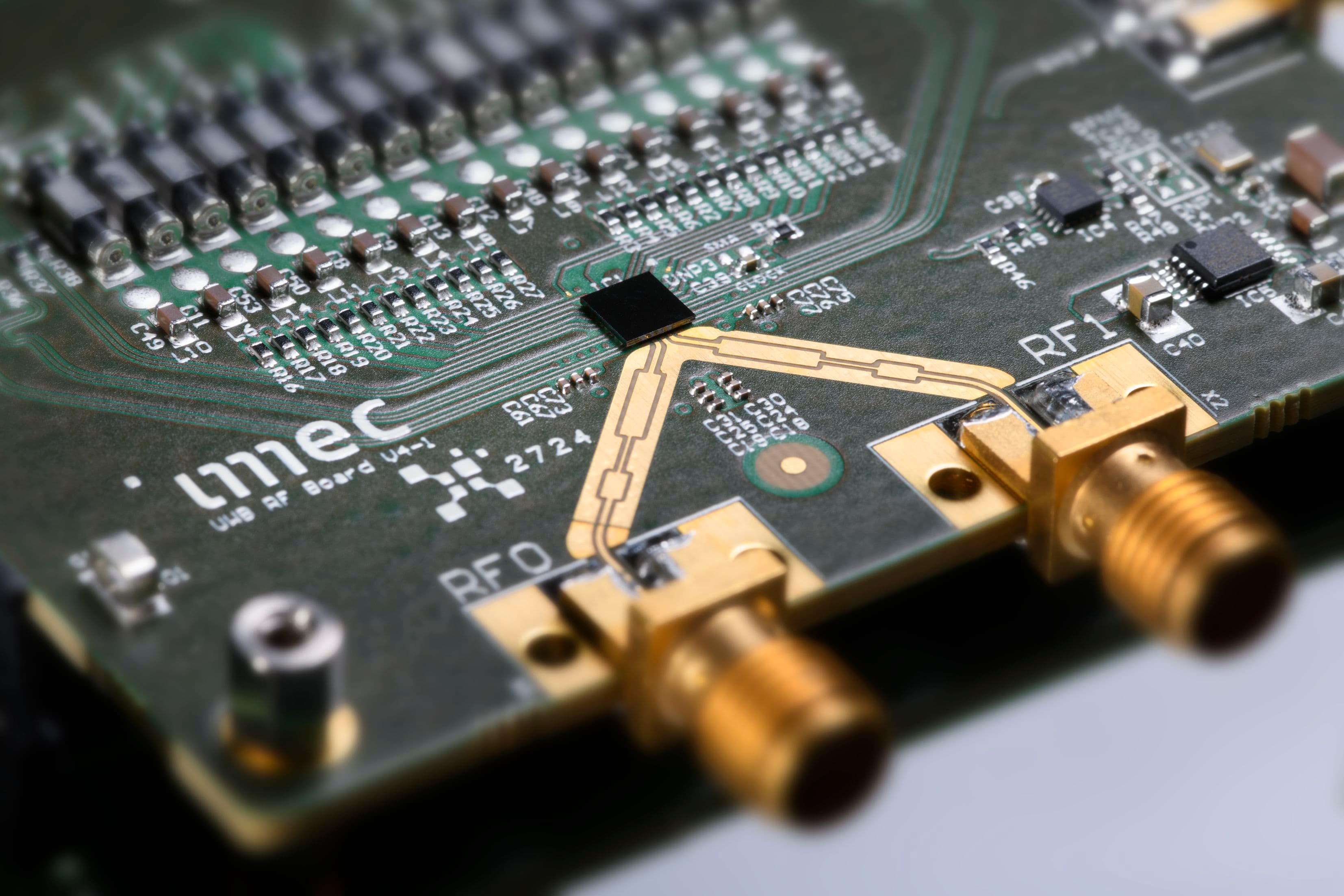

Founded in 1991 as a spin-off company of imec and KU Leuven - ESAT, easics has 28 years of experience designing first-time-right ASICs and FPGAs. Easics’ solutions are at the heart of many applications, including smartphones, high-end cameras, infrared image sensors, food sorting machines, hearing aids and earth-observation satellites.

Recently, easics has partnered in several imec.icon R&D projects, collaborative efforts between leading academic and industrial partners that are co-funded by Flanders Innovation & Entrepreneurship (VLAIO). The AI solution in the demo was partly developed in the imec.icon project HELP Video!, a project on scalable embedded video processing. Two more imec.icon projects, cREAtIve and SenseCity, are currently running. In these projects, research and development continues on the automated framework that generates optimal AI engines for both FPGA and ASIC platforms.

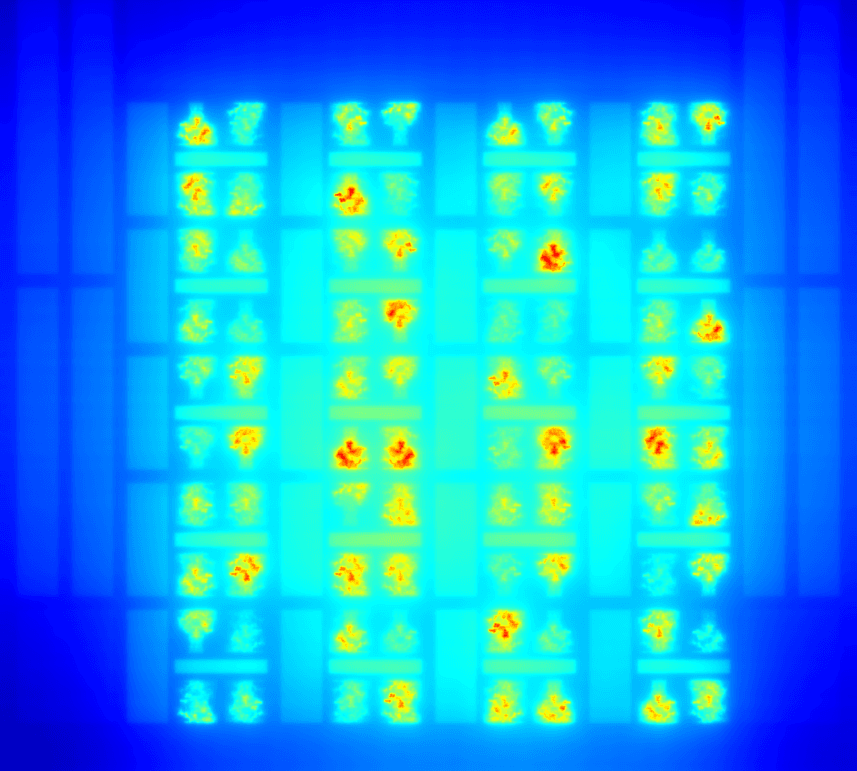

Building on its AI platform, easics plans tight integration with a number of novel and existing sensors, such as image sensors that capture light inside and outside the visible spectrum (e.g. hyperspectral and thermal infrared), 3D scanning lasers (LiDAR), Time-of-Flight (ToF) sensors, microscopy, radar, ultrasound sensors and microphones.

Application domains that will benefit from easics’ embedded AI solutions include:

- Industry 4.0: in-line quality control, factory automation, robots, cobots, predictive maintenance

- Smart health: medical image analysis, low-power wearables and implants

- Smart mobility: driver assistance, self-driving vehicles

- Smart city & surveillance: people, crowd and traffic monitoring

- Smart agriculture: intelligent harvesting machines

- Space 4.0: earth observation over lower-bandwidth radio links

Want to know more?

- Discover more about the company and its solutions on the Easics website.

Published on:

13 May 2019