The need for a leap in performance

Analyzing an entire genome or a set of protein markers from a drop of blood in minutes, while reducing the cost to pennies. Fluently interacting within highly dynamic and detailed AR/VR environments. Relying on semi-autonomous, contextually aware AI personal assistants monitoring our human digital twin. Gazing into the future, the possibilities for improving our lives offered by blending high-performance computing (HPC) and artificial intelligence (AI) systems seem endless. Nevertheless, they are limited in at least one aspect: the processing capability and increasing cost of today’s compute systems. The challenge is anything but trivial: these new applications need orders-of-magnitude improvements in performance and energy efficiency while keeping cost under control.

Unfortunately, we cannot build such powerful systems with the current generation of high-performance/AI hardware through traditional scaling. We cannot reach our goal by simply adding more processor cores and memory devices: the resulting explosion in system footprint, energy consumption, and cost is no longer justifiable.

But why can’t we just scale up today’s systems as we used to? What are the fundamental hurdles? And what approach can we take to achieve non-linear gains in compute capability and energy efficiency while considering the total cost of ownership (TCO)?

The rise of system scaling walls

For over 50 years, Moore’s Law (i.e., the transistor count doubling on the same Si area for the same cost, roughly every two years) and Dennard’s Law (i.e., the power density staying constant with transistor scaling) have underpinned gradual improvements in system performance at a consistent cost. But for more than a decade now, it has been clear that the dimensional scaling inspired by these laws can no longer be used to provide the system scaling expectations of future applications. This stems from several factors we refer to as scaling walls: the dimensional, the memory/bandwidth, the power/thermal and the cost scaling walls.
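
As a rough illustration of the two laws, they can be written in a simplified textbook form. The symbols $N_0$, $t_0$, $T$, and $\kappa$ are notation introduced here for the sketch, not taken from the article. With a doubling period of $T \approx 2$ years, the transistor count on a fixed Si area follows approximately

$$
N(t) \approx N_0 \cdot 2^{(t - t_0)/T}.
$$

For Dennard scaling, assume that dimensions and supply voltage shrink by a factor $\kappa > 1$ while the clock frequency rises by $\kappa$. The switching power per transistor, $P = C V^2 f$, and the transistor area $A$ then scale as

$$
P' = \frac{C}{\kappa} \left(\frac{V}{\kappa}\right)^2 (\kappa f) = \frac{P}{\kappa^2},
\qquad
A' = \frac{A}{\kappa^2},
\qquad
\frac{P'}{A'} = \frac{P}{A}.
$$

Power density stays constant only as long as the voltage keeps scaling with the dimensions, which is the assumption that eventually stopped holding.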

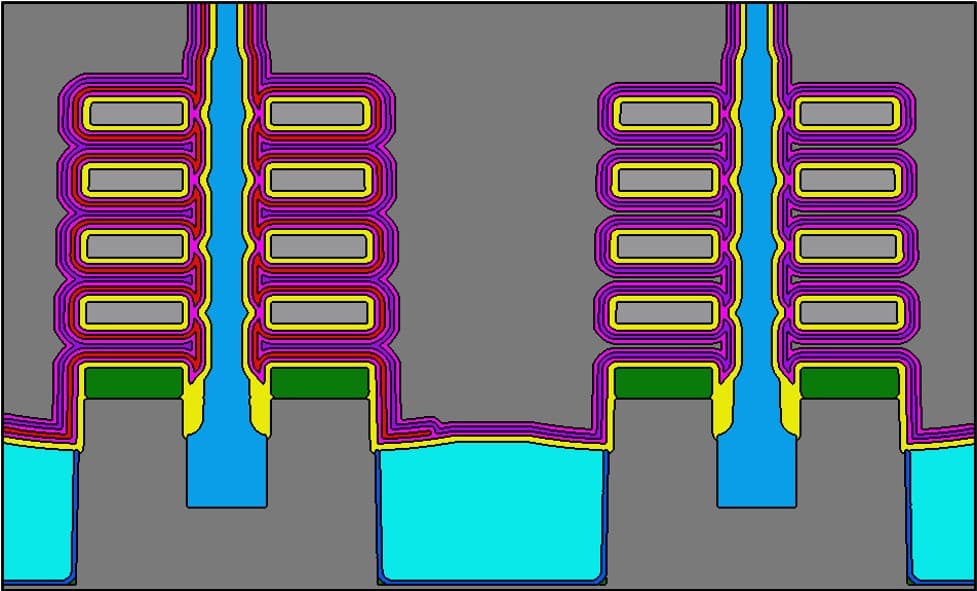

While the number of transistors in the same Si area keeps doubling about every two years, the industry faces severe cost, speed, and power roadblocks in its complex system architectures. In traditional Von Neumann compute architectures, for example, the rise in on-chip cache memory capacity has not kept pace with the evolution in logic, and feeding data to the logic core at sufficient speed has become increasingly challenging. Besides this memory/bandwidth wall, leakage problems broke Dennard scaling, resulting in thermal dissipation issues and frequency stagnation, while the cost to manufacture the latest nodes has skyrocketed.

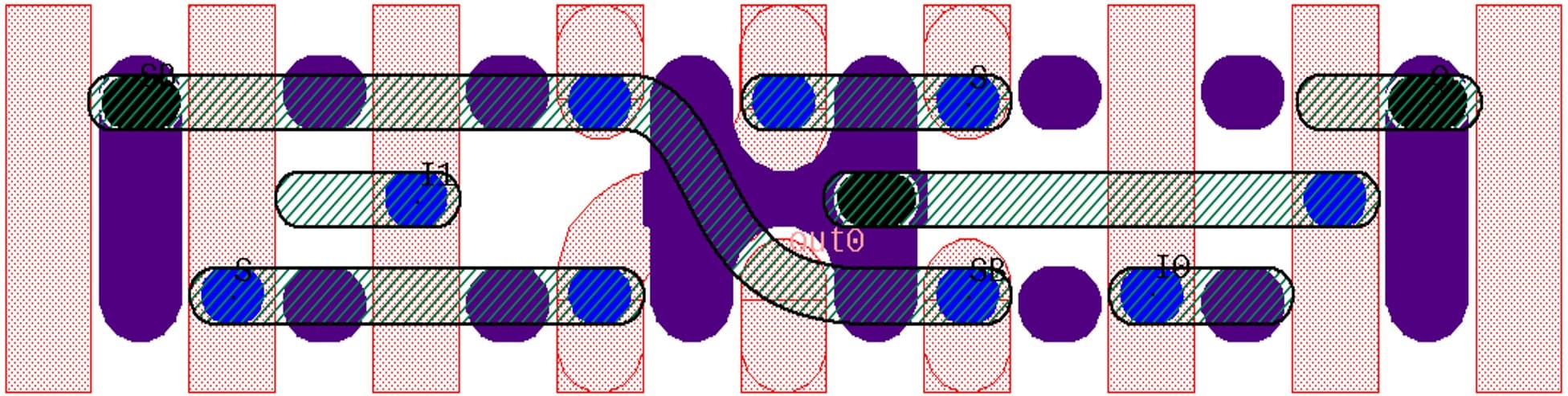

At the architecture level, complex memory hierarchies, multi-core architectures, and domain-specific compute accelerators (xPUs) on a single system-on-chip have become a way to overcome these scaling walls. But even in this multi-core era, with continued scaling at advanced nodes, the performance, power, area, and cost (PPAC) scaling of today’s processing units has started to saturate.

Figure 1 – Evolution of the transistor count, number of cores, power consumption, CPU frequency and on-chip cache capacity within classical Von Neumann-based processors.

Addressing the Innovator’s Dilemma in an increasingly expensive world

Add to these challenges the tremendous cost scaling wall, and we can see that the “happy scaling era” of “faster and cheaper” is over. The semiconductor community used to think narrowly about cost. With every new technology generation, analyses showed a reduction in the relative cost per mm² of Si, as predicted by Moore’s Law. But due to the growing complexity of semiconductor manufacturing technologies and system architectures, this no longer translates into a total cost reduction. Besides the rising direct costs for packaged silicon in new technology nodes, the costs of cooling and power consumption during the systems’ lifetime, equipment maintenance, and so on increasingly add to the TCO. Consequently, the performance per TCO is decreasing, and systems are getting more expensive for the same physical footprint.
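
To make the performance-per-TCO metric concrete, here is a minimal sketch of how it could be computed. The cost breakdown (hardware, energy including a cooling overhead, and maintenance), the names `SystemCost`, `total_cost_of_ownership`, and `performance_per_tco`, and every number below are illustrative assumptions, not figures or a model from the article.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760  # 365 days * 24 hours


@dataclass
class SystemCost:
    """Hypothetical cost inputs for one compute system (all fields are assumptions)."""
    hardware_cost: float         # up-front cost of packaged silicon and system
    power_kw: float              # average electrical draw in kW
    cooling_overhead: float      # extra cooling energy as a fraction of power_kw
    energy_price_per_kwh: float  # price of electricity per kWh
    maintenance_per_year: float  # yearly equipment maintenance cost
    lifetime_years: float        # years the system stays in service


def total_cost_of_ownership(cost: SystemCost) -> float:
    """Sum up-front hardware cost and lifetime operating costs."""
    energy_kwh = (
        cost.power_kw * (1.0 + cost.cooling_overhead)
        * HOURS_PER_YEAR * cost.lifetime_years
    )
    energy_cost = energy_kwh * cost.energy_price_per_kwh
    maintenance_cost = cost.maintenance_per_year * cost.lifetime_years
    return cost.hardware_cost + energy_cost + maintenance_cost


def performance_per_tco(performance: float, cost: SystemCost) -> float:
    """Performance (in any consistent unit) divided by TCO."""
    return performance / total_cost_of_ownership(cost)


# Made-up example: the newer system is twice as fast but costs more to buy and run.
older = SystemCost(hardware_cost=100_000, power_kw=10, cooling_overhead=0.3,
                   energy_price_per_kwh=0.15, maintenance_per_year=5_000,
                   lifetime_years=5)
newer = SystemCost(hardware_cost=300_000, power_kw=25, cooling_overhead=0.5,
                   energy_price_per_kwh=0.15, maintenance_per_year=12_000,
                   lifetime_years=5)

print("older:", performance_per_tco(1.0, older))
print("newer:", performance_per_tco(2.0, newer))
```

With these made-up inputs, the newer system delivers twice the performance but a lower performance per TCO, which is the trend described above.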

All these factors contribute to the Innovator’s Dilemma: the challenge every company eventually faces of developing, investing in, and bringing to market disruptive innovations alongside its legacy businesses, which rely on sustaining technologies that do not change the value proposition in a market. Today, the challenge is to keep driving the tremendous growth rate we have witnessed in AI and HPC while accounting for the realities of performance per TCO. Improving this metric by several orders of magnitude is our main driver going forward.

Co-optimization across the system stack

We are convinced that unprecedented gains in compute can only be achieved by leveraging innovations across the entire system stack, from algorithms to core device components. Moreover, these innovations should be co-designed from the outset to ensure optimal TCO gains. These principles guide imec’s Compute System Architecture (CSA) activities.

From bottom up to top down

System-level thinking involves a fundamental belief in a top-down systems architecture approach. Traditionally, many developments have been driven by the progress in Moore’s Law scaling: new transistor architectures have paved the way to new devices and then, higher up in the system stack, to better-performing circuits, memories, and processor cores. However, using such a purely bottom-up approach limits the overall opportunity to leverage co-design across the entire system.

Figure 2 – From traditional bottom-up to top-down approaches.

A system-level approach embraces the fact that application requirements must drive solutions. We let target applications drive innovation at the component and system level instead of solving problems with existing hardware. We are developing a mindset, framework, and methodology that enable continuous application-to-device co-design across the entire stack.

Impactful, relevant applications will drive system development, anticipating what industry and society will need in the years to come.

An architecture-to-technology optimization loop

The methodology for developing future compute system architectures starts with understanding the requirements for the target applications and the critical underlying workloads and algorithms.

If we take the ‘fast’ analysis of a full genome as a target application, an appropriate workload could be the classification of genetic defects. Next, we consider the full system stack by envisioning what the software, compute system, and key device technology building blocks will look like for the target application: we define the innovations required at the different layers of abstraction – including algorithms, architecture modeling, performance analysis, and implementation.

Architecture modeling and analysis then give feedback on the expected performance (per TCO) at the system level and how to change our ‘trajectory’ to achieve the target performance. The benefits at the system level will be the result of the various cross-optimizations at the different abstraction layers. These optimizations will reinforce each other, ideally leading to a non-linear improvement in performance. This is the fundamentally iterative co-design loop.

The key performance per TCO metric that comes from the model guides us toward the next step(s). These steps can range from restructuring algorithms to evaluating different system design(s) and, potentially, prototyping: developing proof of concepts for a scalable, reliable, and power-efficient architecture that can deliver the high-performance computing required for next-gen applications.
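
The iterative loop described above can be sketched as a toy search. Everything here is hypothetical: the option names, the speedup and cost factors, the multiplicative model in `evaluate`, and the greedy one-layer-at-a-time strategy in `co_design_loop` are assumptions chosen for illustration, not imec’s models or methodology.

```python
# Hypothetical options per abstraction layer: name -> (speedup factor, TCO factor).
# All factors are made up and relative to a baseline of 1.0.
OPTIONS = {
    "algorithm": {"baseline": (1.0, 1.0), "restructured": (1.8, 1.0)},
    "architecture": {"cpu_only": (1.0, 1.0), "with_accelerator": (4.0, 1.5)},
    "technology": {"2d_packaging": (1.0, 1.0), "3d_stacking": (1.5, 1.3)},
}


def evaluate(design):
    """Toy system model: assumes gains and costs compound multiplicatively."""
    performance = 1.0
    tco = 1.0
    for layer, choice in design.items():
        speedup, cost_factor = OPTIONS[layer][choice]
        performance *= speedup
        tco *= cost_factor
    return performance / tco  # performance per TCO


def co_design_loop(target, max_iterations=10):
    """Greedy loop: change one layer per iteration, guided by the model's metric."""
    design = {
        "algorithm": "baseline",
        "architecture": "cpu_only",
        "technology": "2d_packaging",
    }
    score = evaluate(design)
    for _ in range(max_iterations):
        if score >= target:
            break  # target performance per TCO reached
        # Build every design that differs from the current one in a single layer.
        trials = [
            {**design, layer: choice}
            for layer, choices in OPTIONS.items()
            for choice in choices
            if choice != design[layer]
        ]
        best_trial = max(trials, key=evaluate)
        best_score = evaluate(best_trial)
        if best_score <= score:
            break  # the model sees no further improvement
        design, score = best_trial, best_score
    return design, score


final_design, final_score = co_design_loop(target=5.0)
print(final_design, final_score)
```

In this toy model the improvements at the different layers multiply rather than add, which is one simple way to picture optimizations reinforcing each other. A real flow would replace `evaluate` with architecture modeling and performance analysis.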

From modeling infrastructure and post-Moore compute technologies to a multi-diverse research group: an essential toolbox

Existing models are not powerful enough to model and extract performance information from novel system definitions. Therefore, we develop new scaled modeling and simulation capabilities intended to outperform current models in terms of accuracy and speed.

The models themselves incorporate the characteristics of technology building blocks: novel technological capabilities that will address system scaling challenges, from packaging to compute elements to software innovation. To validate the outcome of our models, we aim to build prototypes of critical technology building blocks at all levels of system development. These technologies include not only hardware elements but also algorithms, middleware, programming models, and networking stacks, all the way up to the layer where developers write software and users interact with a device.

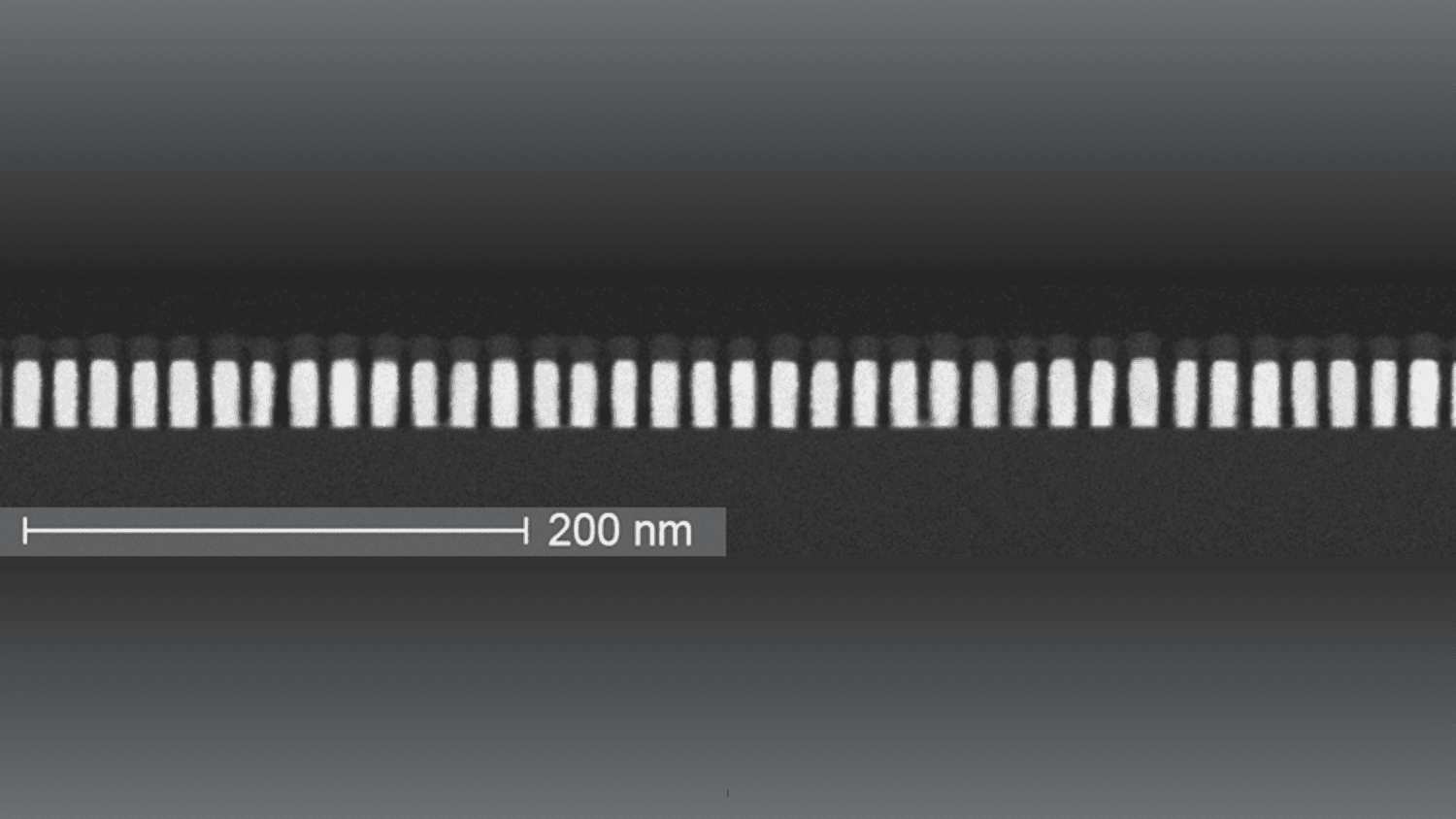

On the fundamental technology side, we look at existing Si-based technologies (such as advanced optical I/O and 3D technologies) and explore emerging AI algorithms and post-Moore compute alternatives. These include quantum computing, optical-computing paradigms, and superconducting digital computing – promising unprecedented improvements in power efficiency, computational density, and interconnect bandwidth.

The modeling and technology challenges are non-trivial. But, without doubt, our team is our greatest asset in bringing this mission to a successful conclusion: a diverse, multi-disciplinary group with backgrounds in algorithms research, software enablement, compilers and middleware for heterogeneous systems, microarchitecture, and circuit design. These are people who can and want to think outside the box to bring new, meaningful, and impactful ideas.

This article was originally published in EETimes Europe.

Arindam Mallik leads the Future System Exploration (FuSE) group in the Compute System Architecture (CSA) R&D unit. He received M.S. and PhD degrees in Electrical Engineering and Computer Science from Northwestern University, USA, in 2004 and 2008, respectively. Arindam is a technologist with 20 years of experience in semiconductor research. He has authored or co-authored more than 100 papers in international journals and conference proceedings, and holds a number of international patents. His research interests include novel computing systems, design-technology co-optimization, and the economics of semiconductor scaling.

Boris Leekens is a project manager in the CSA team. Before joining imec in 2011, he held several positions in Alcatel-Lucent and tbp Electronics where he led the Test Engineering team and several projects for the manufacturing industrialization of xDSL. In his current position at imec, he brings system knowledge and structured product design knowledge to the CSA team.

Eric Mejdrich is Vice President of Research and Development at imec. He has held a variety of roles in his career, ranging from technical (architecture to implementation) to leadership positions, in both large companies and start-ups. His primary technical focus has been in systems architecture, both microprocessors and heterogeneous computer systems, from Blue Gene to HoloLens.

Published on:

14 November 2022