HARrad

Introduction

Many smart home applications rely on indoor human activity recognition. This challenge is currently tackled primarily with video camera sensors. However, such sensors have fundamental technical shortcomings in indoor environments and often breach privacy as well. A radar sensor, in contrast, resolves most of these flaws and, in particular, preserves privacy.

We investigated a novel approach to automatic indoor human activity recognition, feeding high-dimensional radar and video camera sensor data into several deep neural networks. Furthermore, we explored the efficacy of sensor fusion as a solution under less than ideal circumstances. To that end, we constructed and published two data sets of 2347 gesture samples and 1505 event samples, each distributed over six activity types (only the radar data is released; see the note on video data below). As described in [1], we employ six different deep neural networks to classify activities based on radar and video camera data. A complete overview of this study can be found in our published paper at:

https://link.springer.com/article/10.1007/s00521-019-04408-1.

The code linked to this paper can be found at https://github.com/baptist/harrad. More information on our team can be found at http://www.idlab.ugent.be and http://www.sumo.intec.ugent.be/.

If you find the data set useful, please cite the accompanying paper [1].

[1] Vandersmissen, Baptist, et al. "Indoor human activity recognition using high-dimensional sensors and deep neural networks." Neural Computing and Applications (2019): 1-15.

Summary

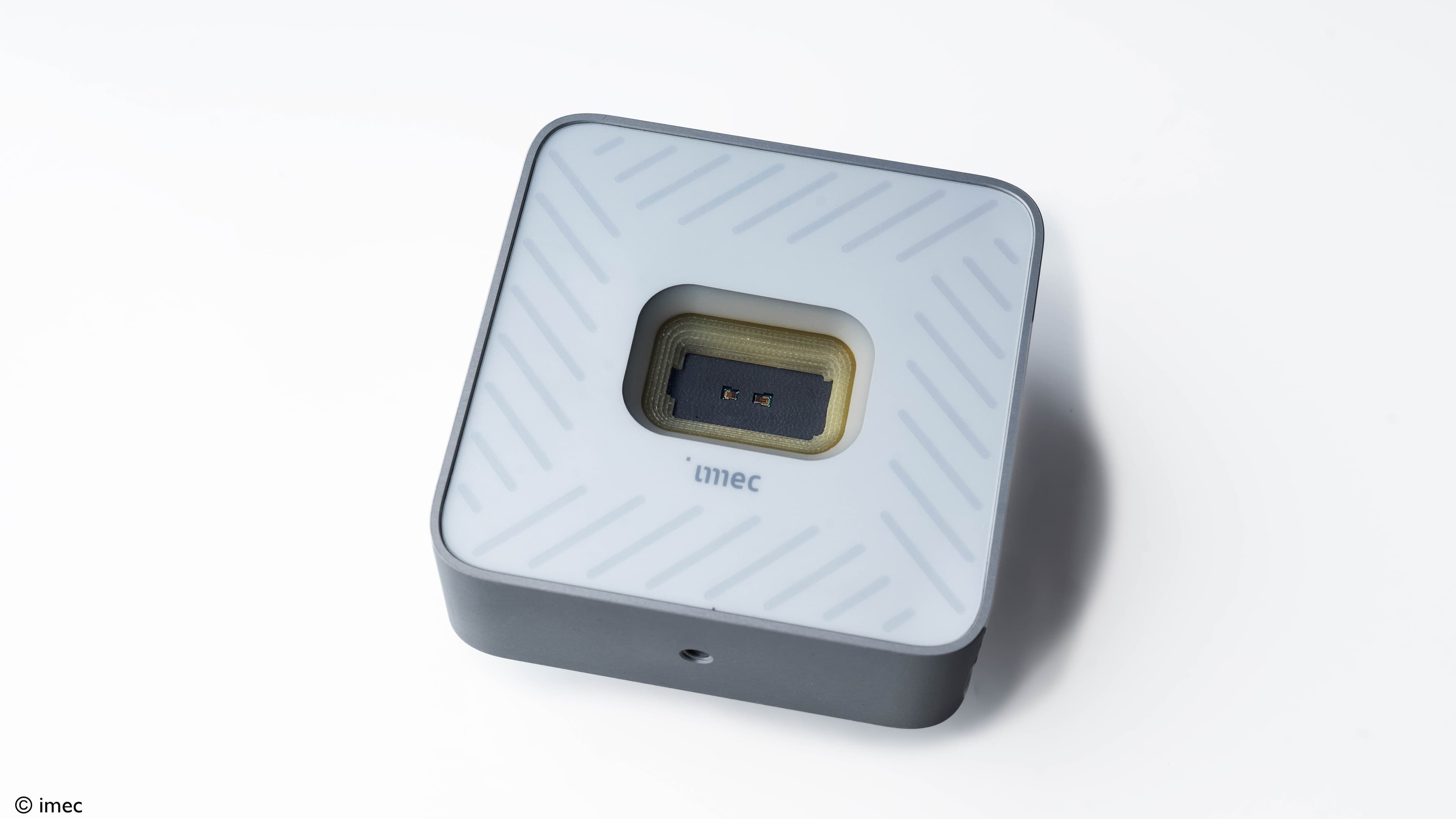

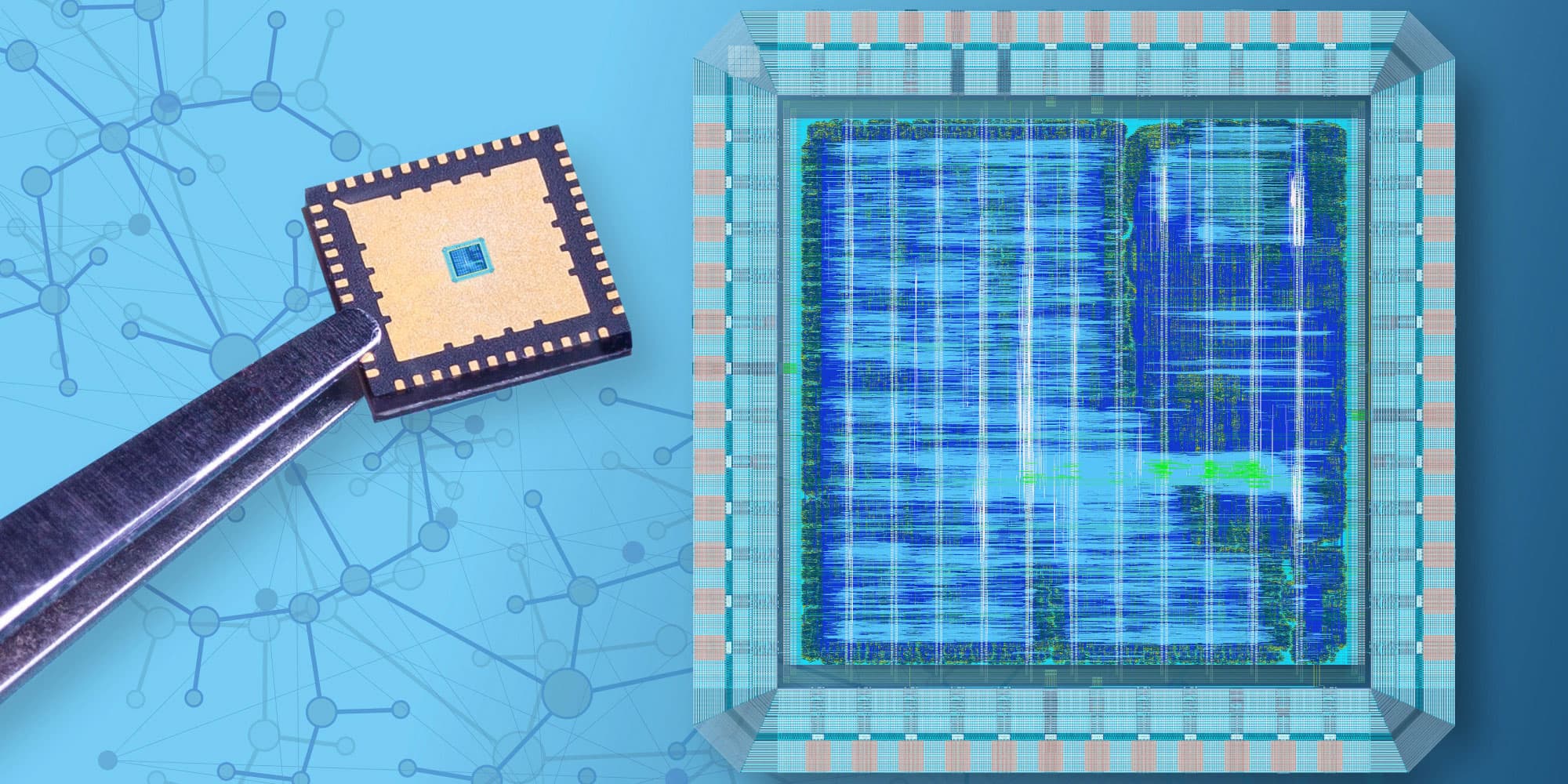

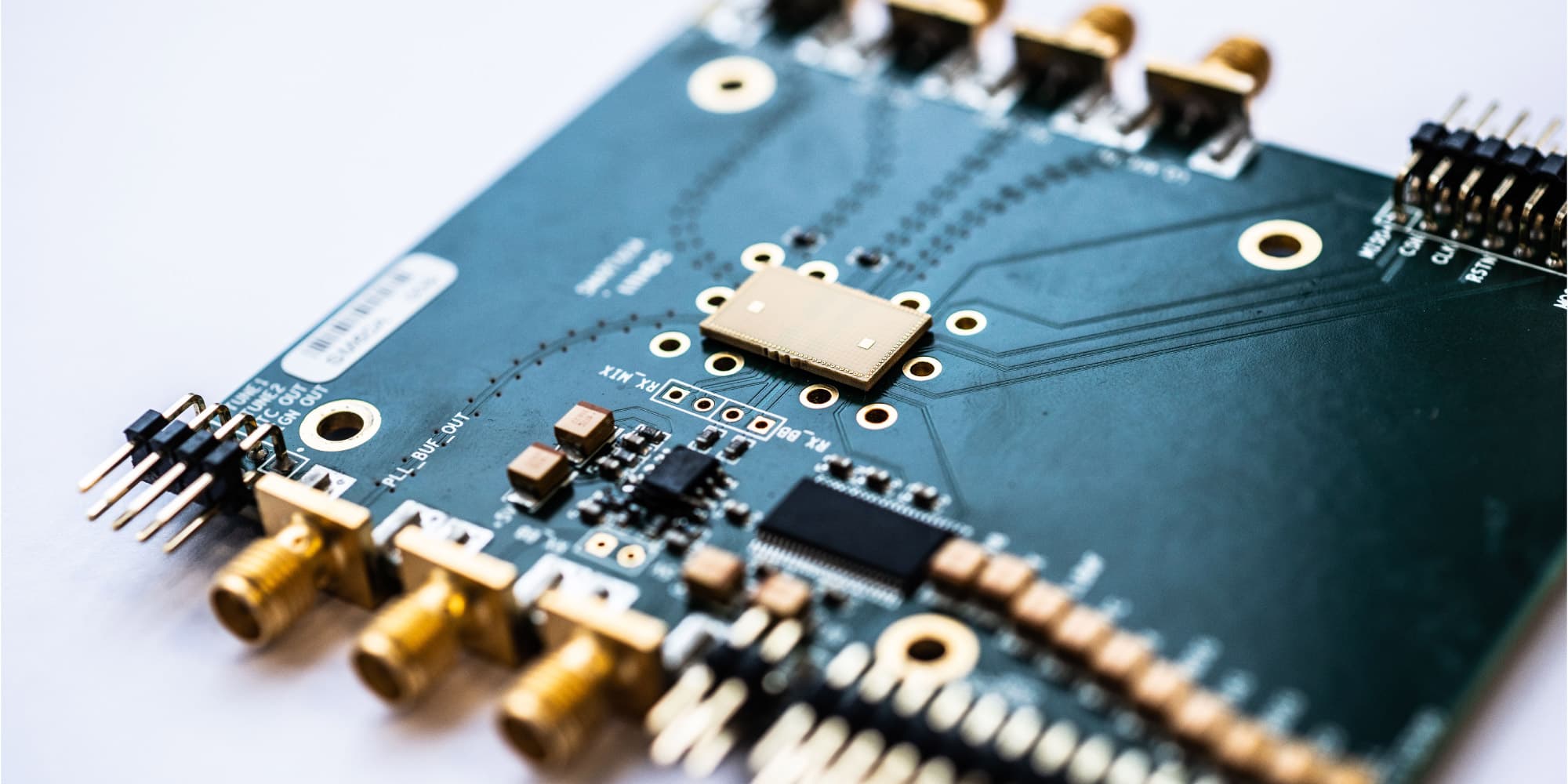

To facilitate further research, we developed and released the Human Activity Recognition with a Radar (HARrad) data set. HARrad was recorded using a Frequency Modulated Continuous Wave (FMCW) radar with a center frequency of 77 GHz. In total, the data set consists of 37 HDF5 files (readable with h5py) containing the raw FMCW radar data.

Table 1 lists the details per recording subject. In total, the data sets contain 3852 activities (1505 event-related and 2347 gesture-related), each lasting 2.56 s on average. Altogether, the data sets comprise 4.32 hours of annotated recordings (the sum of the recording times in Table 1), covering 12 activity classes.

Table 1: Details of radar files per subject.

| Subject | # Files | # Frames | Time (min) | # Samples |
|---|---|---|---|---|
| S1 | 4 | 25244 | 28 | 381 |
| S2 | 11 | 64017 | 71 | 995 |
| S3 | 4 | 25244 | 28 | 375 |
| S4 | 4 | 25244 | 28 | 433 |
| S5 | 4 | 25243 | 28 | 428 |
| S6 | 4 | 25246 | 28 | 406 |
| S7 | 4 | 25244 | 28 | 511 |
| S8 | 1 | 9017 | 10 | 141 |
| S9 | 1 | 9016 | 10 | 182 |
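As a quick consistency check, the per-subject figures in Table 1 can be summed in a few lines of Python. The values are transcribed from the table (times are the rounded minutes shown there), and `TABLE_1` and `table_1_totals` are hypothetical names used only for this illustration. The totals agree with the text: 37 files and 3852 samples, and the 259 recorded minutes amount to roughly 4.32 hours.

```python
# Table 1 transcribed as (subject, files, frames, minutes, samples).
TABLE_1 = [
    ("S1", 4, 25244, 28, 381),
    ("S2", 11, 64017, 71, 995),
    ("S3", 4, 25244, 28, 375),
    ("S4", 4, 25244, 28, 433),
    ("S5", 4, 25243, 28, 428),
    ("S6", 4, 25246, 28, 406),
    ("S7", 4, 25244, 28, 511),
    ("S8", 1, 9017, 10, 141),
    ("S9", 1, 9016, 10, 182),
]


def table_1_totals(rows):
    """Sum the per-subject columns of Table 1."""
    minutes = sum(r[3] for r in rows)
    return {
        "files": sum(r[1] for r in rows),
        "frames": sum(r[2] for r in rows),
        "minutes": minutes,
        "hours": round(minutes / 60, 2),  # rounded minutes, so approximate
        "samples": sum(r[4] for r in rows),
    }


if __name__ == "__main__":
    print(table_1_totals(TABLE_1))
```

Running it prints `{'files': 37, 'frames': 233515, 'minutes': 259, 'hours': 4.32, 'samples': 3852}`.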

The labels are provided in the `activities.csv` file, which describes one activity sample per line in the following form:

`<filename>,<start index>,<stop index>,<activity class>,<subject>`
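A minimal Python sketch for loading the label file, assuming that `activities.csv` has no header row, that the start and stop indices are integers, and that fields are separated by plain commas as in the format line above. `Activity`, `parse_activities` and `parse_activities_text` are hypothetical helpers, not part of the released code, and whether the stop index is inclusive is not stated here.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Activity:
    """One labeled activity sample, i.e. one line of activities.csv."""
    filename: str
    start: int     # start index (assumed: integer frame index)
    stop: int      # stop index (inclusive/exclusive convention not stated)
    activity: str  # activity class label
    subject: str   # recording subject


def parse_activities(lines: Iterable[str]) -> List[Activity]:
    """Parse label lines, assuming no header row and exactly five fields per line."""
    records = []
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        filename, start, stop, activity, subject = (field.strip() for field in row)
        records.append(Activity(filename, int(start), int(stop), activity, subject))
    return records


def parse_activities_text(text: str) -> List[Activity]:
    """Convenience wrapper for parsing label lines held in a string."""
    return parse_activities(io.StringIO(text))
```

To read the actual file, open it with `open("activities.csv", newline="")` and pass the file object to `parse_activities`. Unpacking exactly five fields makes malformed lines fail loudly with a `ValueError` instead of being silently misread.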

We also provide the random stratified (RS) splits used in [1]. Table 2 lists the number of samples per activity class, and Table 3 shows a more detailed distribution of all samples per subject and activity class.
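To illustrate what a random stratified split does, the sketch below shuffles the samples within each activity class and assigns the same fraction of every class to the test set, so class proportions are preserved. It is a generic illustration with an arbitrary test fraction and seed; `stratified_split` is a hypothetical helper and not the procedure that produced the splits released with [1], which should be used to reproduce the paper's results.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[str], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Randomly split sample indices so that each class keeps roughly the
    same train/test proportion. Returns (train_indices, test_indices)."""
    by_class: Dict[str, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train: List[int] = []
    test: List[int] = []
    for label in sorted(by_class):  # fixed class order keeps the result deterministic
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)
```

Splitting per class rather than over the whole set prevents rare classes such as Clothe or Unclothe from ending up under-represented in the test set by chance.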

Table 2: Number of samples and average duration of each activity for both data sets.

| Abbr. | Activity | Total | Avg. duration (s) |
|---|---|---|---|
| **Gestures** | | | |
| D | Drumming | 390 | 2.92 (± 0.94) |
| S | Shaking | 360 | 3.03 (± 0.97) |
| Sl | Swiping Left | 436 | 1.60 (± 0.27) |
| Sr | Swiping Right | 384 | 1.71 (± 0.31) |
| Tu | Thumb Up | 409 | 1.85 (± 0.37) |
| Td | Thumb Down | 368 | 2.06 (± 0.42) |
| **Events** | | | |
| E | Entering Room | 221 | 3.01 (± 0.73) |
| L | Leaving Room | 224 | 3.94 (± 0.78) |
| Sd | Sitting Down | 342 | 1.98 (± 0.31) |
| Su | Standing Up | 344 | 1.65 (± 0.28) |
| C | Clothe | 195 | 5.62 (± 1.76) |
| U | Unclothe | 179 | 4.97 (± 1.09) |
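The overall average duration quoted in the Summary can be recovered from Table 2 by weighting each class average by its number of samples. In this Python sketch, `TABLE_2` and `duration_summary` are hypothetical names and the values are transcribed from the table; because the class averages are rounded to two decimals, the result is approximate.

```python
# (abbreviation, number of samples, average duration in seconds) from Table 2.
TABLE_2 = [
    ("D", 390, 2.92), ("S", 360, 3.03), ("Sl", 436, 1.60),
    ("Sr", 384, 1.71), ("Tu", 409, 1.85), ("Td", 368, 2.06),
    ("E", 221, 3.01), ("L", 224, 3.94), ("Sd", 342, 1.98),
    ("Su", 344, 1.65), ("C", 195, 5.62), ("U", 179, 4.97),
]


def duration_summary(rows):
    """Return (total samples, sample-weighted mean duration in seconds)."""
    n_total = sum(n for _, n, _ in rows)
    weighted_seconds = sum(n * mean for _, n, mean in rows)
    return n_total, weighted_seconds / n_total


if __name__ == "__main__":
    n, mean_s = duration_summary(TABLE_2)
    print(f"{n} samples, {mean_s:.2f} s on average")
```

This prints `3852 samples, 2.56 s on average`, matching the figures in the Summary.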

Table 3: Number of recorded gestures and events per subject (S1 to S9). Columns D to Td are gestures and columns E to U are events; abbreviations as in Table 2.

| Subject | D | S | Sl | Sr | Tu | Td | E | L | Sd | Su | C | U |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S1 | 49 | 40 | 44 | 22 | 41 | 37 | 20 | 20 | 29 | 27 | 30 | 22 |
| S2 | 89 | 80 | 99 | 92 | 83 | 80 | 78 | 79 | 83 | 88 | 73 | 71 |
| S3 | 44 | 43 | 48 | 46 | 35 | 38 | 14 | 14 | 33 | 33 | 13 | 14 |
| S4 | 33 | 34 | 35 | 35 | 32 | 32 | 32 | 33 | 51 | 50 | 33 | 33 |
| S5 | 40 | 40 | 45 | 46 | 52 | 47 | 22 | 22 | 43 | 43 | 14 | 14 |
| S6 | 45 | 45 | 46 | 47 | 58 | 52 | 15 | 16 | 32 | 34 | 8 | 8 |
| S7 | 46 | 40 | 72 | 62 | 72 | 47 | 28 | 28 | 45 | 42 | 17 | 12 |
| S8 | 17 | 15 | 20 | 23 | 17 | 19 | 5 | 5 | 6 | 6 | 5 | 3 |
| S9 | 27 | 23 | 27 | 11 | 19 | 16 | 7 | 7 | 20 | 21 | 2 | 2 |
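The three tables can be cross-checked against each other: the row sums of Table 3 should reproduce the # Samples column of Table 1, and its column sums the Total column of Table 2. A small Python sketch, with counts transcribed from Table 3; `TABLE_3`, `subject_totals` and `class_totals` are hypothetical names for illustration.

```python
CLASSES = ["D", "S", "Sl", "Sr", "Tu", "Td", "E", "L", "Sd", "Su", "C", "U"]

# Per-subject counts from Table 3, in the column order of CLASSES.
TABLE_3 = {
    "S1": [49, 40, 44, 22, 41, 37, 20, 20, 29, 27, 30, 22],
    "S2": [89, 80, 99, 92, 83, 80, 78, 79, 83, 88, 73, 71],
    "S3": [44, 43, 48, 46, 35, 38, 14, 14, 33, 33, 13, 14],
    "S4": [33, 34, 35, 35, 32, 32, 32, 33, 51, 50, 33, 33],
    "S5": [40, 40, 45, 46, 52, 47, 22, 22, 43, 43, 14, 14],
    "S6": [45, 45, 46, 47, 58, 52, 15, 16, 32, 34, 8, 8],
    "S7": [46, 40, 72, 62, 72, 47, 28, 28, 45, 42, 17, 12],
    "S8": [17, 15, 20, 23, 17, 19, 5, 5, 6, 6, 5, 3],
    "S9": [27, 23, 27, 11, 19, 16, 7, 7, 20, 21, 2, 2],
}


def subject_totals(table):
    """Samples per subject (row sums); compare with '# Samples' in Table 1."""
    return {subject: sum(counts) for subject, counts in table.items()}


def class_totals(table):
    """Samples per activity class (column sums); compare with 'Total' in Table 2."""
    return {
        cls: sum(counts[i] for counts in table.values())
        for i, cls in enumerate(CLASSES)
    }
```

For example, `subject_totals(TABLE_3)["S2"]` gives 995 and `class_totals(TABLE_3)["D"]` gives 390, in line with Tables 1 and 2.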

Note: The release of the video data is privacy-sensitive, so we currently provide only the radar data.
