The end of the 20th century was the era in which the keyboard and mouse increasingly faced competition from touchscreens. It is now 2019, and designing how we interact with products (UX design) is a recognized profession. More and more products can be operated in innovative ways, e.g. with our voice or even our brains. But in the vision of Xavier Rottenberg, scientific director and group leader for wave-based sensors and actuators at imec, all this technology will already be outdated by the middle of the 21st century. By then, we will speak only of a bi-directional human-environment interface: a symbiotic relationship between people and their environment, supported by largely hidden technology. But what are we going to use it for? And above all: how are we going to make it happen?

Visit from Mars

In 2031, the Mars One project plans to permanently house its first crew on the red planet. But even when traveling to and from Mars becomes feasible, a single journey will take six to eight months. So Mars travelers will be separated from home for a few years. That may pose some problems, because anyone who has been away on a (business) trip, however short or long, knows that the lack of the warmth of home cannot yet be compensated for by so-called quality time on FaceTime or Skype.

But let’s not despair. By 2035, the technology for telepresence (being present from a distance) will be so realistic that it can no longer be distinguished from reality. We will 'beam me up, Scotty' not with our physical selves, but with an avatar or virtual twin that can travel anywhere at any time to compensate for our absence. Which means you can be 225 million kilometers from home and still be in time to kiss your children good night. And for the observant reader: of course we will have come up with an argument for why we, and not our avatars, should go to Mars... "We choose to go to the Moon!", remember?

Excite all our senses with only light and sound

In fact, telepresence is not that difficult on paper. You only need two basic technologies: photonics (light) and phononics (sound). Or, let us call them advanced optical and acoustic systems. Because they are, of course, far from simple.

Both are based on waves but rely on very different physical processes: acoustic technology is based on the mechanical vibration of matter (solid, liquid or gaseous) and deals with phonons, while optical technology is based on electromagnetic field oscillations and deals with photons. With these two technologies, or a clever combination of them, we envision that we will be able to read and influence all the senses.

A virtual presence will then be nothing more than a complex interplay between advanced projections and collections of sound and light waves.

With non-invasive optical and acoustic technology, we will be able to read and stimulate all our senses and thus build a bi-directional interface with our environment.

Experience touch without anyone touching you

The basic technology for much of this already exists. By manipulating light waves, we can already create holograms. Although many challenges remain in developing the technology further, it already proves its worth not only in science fiction movies but also in quite useful applications such as on-stage presentations or performances. The manipulation of sound waves is far from new either. Experiments with directional or parametric loudspeakers, which send sound only in a specific direction, date back to the 1960s.

For several reasons, the technology in these domains will undergo a fascinating development in the coming years.

Probably the most important stimulus is the advanced miniaturization of optical and acoustic building blocks. Lenses, mirrors, microphones, loudspeakers... can now be integrated with transistors at micrometer-to-nanometer scales. This opens the door to complex systems that are extremely small and energy-efficient. Picture a television screen that not only has pixels for light, but also for sound (in the form of miniaturized speakers that can be addressed individually). With such a screen, several people could, for example, watch the same movie and each hear the soundtrack in their own language.
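How a row of individually addressable speakers could send sound in one chosen direction can be illustrated with delay-and-sum steering, a standard phased-array technique. The sketch below is only an illustration under stated assumptions, not a description of imec's design: the function name `steering_delays`, the straight-line layout and the speaker spacing are all hypothetical choices made for the example.

```python
import math

# Approximate speed of sound in air at room temperature (assumed value).
SPEED_OF_SOUND_M_S = 343.0


def steering_delays(num_speakers, spacing_m, angle_deg):
    """Return per-speaker delays (in seconds) for a straight line of speakers.

    Each speaker plays the same signal, delayed so that the wavefronts add up
    in the direction angle_deg, measured from the line perpendicular to the
    array. This is plain delay-and-sum steering (hypothetical helper).
    """
    # Extra travel time between two neighbouring speakers for that direction.
    step = spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND_M_S
    raw = [n * step for n in range(num_speakers)]
    # Shift all values so the earliest speaker has zero delay,
    # because a real system cannot apply a negative delay.
    earliest = min(raw)
    return [t - earliest for t in raw]


if __name__ == "__main__":
    # Four speakers, 1 cm apart, steered 30 degrees off the screen normal.
    for index, delay in enumerate(steering_delays(4, 0.01, 30.0)):
        print(f"speaker {index}: {delay * 1e6:.1f} microseconds")
```

With four speakers spaced 1 cm apart and a steering angle of 30 degrees, the delays grow in equal steps of roughly 15 microseconds from one speaker to the next. Serving several viewers at once would presumably mean computing one such set of delays per listener direction and per soundtrack.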

But it is possible to influence much more than just hearing through microphones and speakers. Consider the research in which imec uses acoustic pressure sensors for motion recognition. Or vice versa: ultrasonic waves being used to stimulate a person's tactile sensors from a distance. With these, you would experience a touch or vibration without anyone actually touching you.

A second reason for the expected developments is the potential to combine optical and acoustic technology. One example is micro-optomechanical sensors (MOMS): devices in which an acoustic pressure sensor is read out by an optical wave. Because these optical signals have a much larger bandwidth than the channels in a classic electrical readout, massive multiplexing becomes possible. This allows us to think on a completely different scale. For example, imec has the vision of a surgical glove with several thousand integrated sensors that enable tissue analysis during a surgical procedure, without external imaging equipment.

Or back to the Mars story and telepresence: if you combine advanced holography with the ability to stimulate hearing and the sense of touch through sound waves, you come very close to a realistic virtual presence.

Bi-directional human-environment interface

These examples are only the tip of the iceberg. Vision, hearing and touch are just some of the senses that photonics and phononics can address.

At least as exciting are the developments in reading and influencing the activity in our brains.

With a combination of light and sound technology, it is already possible to measure brain activity non-invasively and without contrast fluid, based on the oxygen saturation in certain areas of the brain. A step further is to do the reverse and, for example, optimize a person's sleep by sending out directional waves. In the ultimate scenario, this can be done without any form of wearable, once a bi-directional human-environment interface is created: a symbiotic interplay between people and their high-tech environment, with human well-being at the center.

The tricorder and glasses to look through materials

Before we get there, we still have a lot of interesting derivative products in the pipeline. For example, recent developments in the optical field open the door to Raman spectroscopy with a portable device. Raman spectroscopy is a technique for determining the chemical properties of materials with harmless light waves. One example application would be monitoring ripening fruit. Another is skin analysis for diagnosing melanoma or monitoring dehydration. Existing devices are still bulky and complex. Once portable, they become a kind of tricorder: the childhood dream of every doctor and Star Trek fan.

And while we are dreaming: thanks to technological developments in the terahertz spectrum, it will become possible to make cameras that can look through certain materials, such as paper or clothing. Once these are integrated in a pair of glasses or contact lenses, James Bond fans will be served as well.

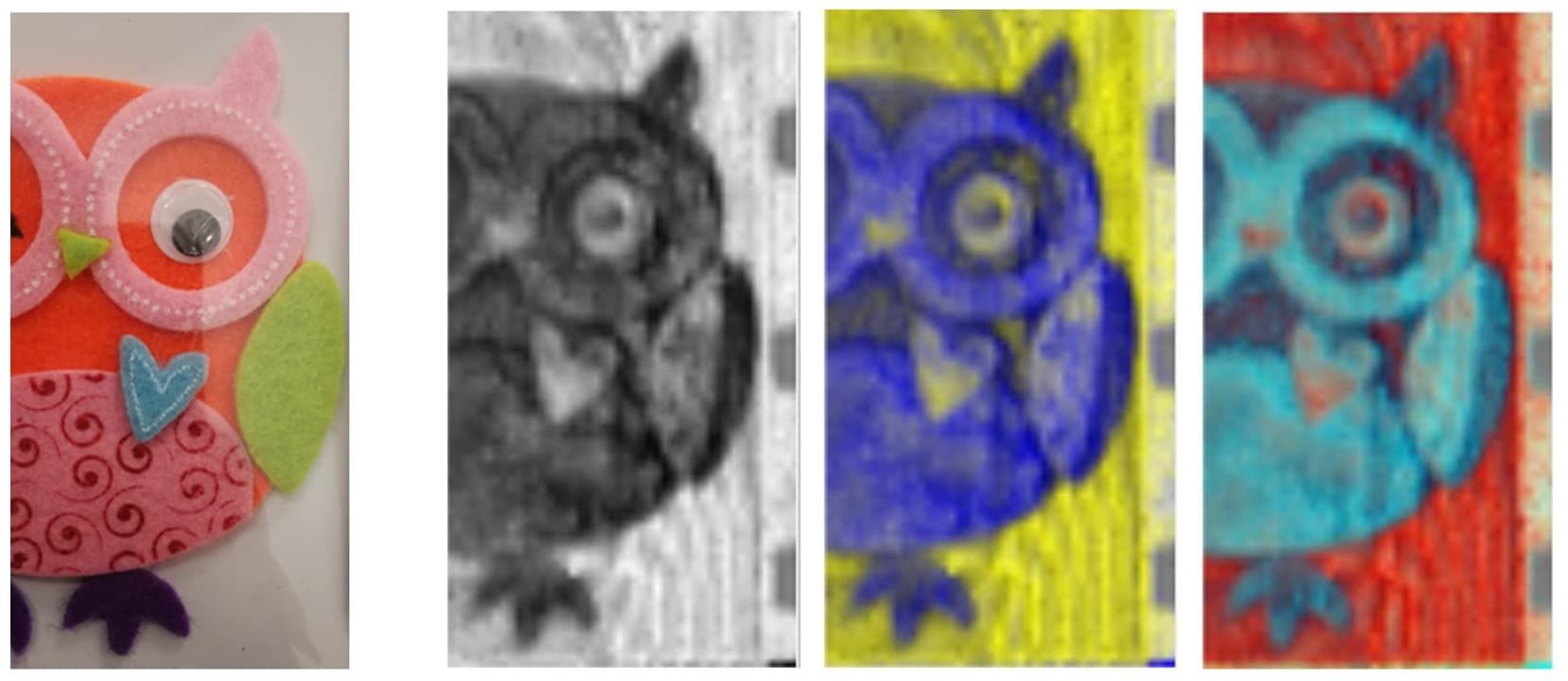

Terahertz technology makes it possible to look through certain materials. The original object is shown on the left. On the right are three images taken with a THz camera after the object has been covered with a sheet of paper.

To boldly go where no man has gone before

Let it be clear that there is still much to be discovered, which only makes it more interesting for researchers. To give just one example that will certainly appeal to the experts: recently, the acoustic equivalent of the (optical) surface plasmon polariton (SPP) was discovered. For non-technicians: this is a previously unknown state of an optical wave. Extremely simplified, you could say it is as if a new state of matter had been discovered alongside the known solid, liquid, gas and plasma, but for optical waves. The discovery of the SPP in the optical domain opened up, among other things, the ability to drastically improve the resolution of imaging techniques. And now the acoustic equivalent has been discovered. On top of all the other developments, new techniques and materials, this gives researchers plenty of material for exploring unknown horizons up to 2035 and beyond.

How is imec contributing to this future?

Imec focuses on the development of photonics and phononics. For example, in 2016 it opened a branch in Florida that specializes in vision systems beyond the visible light spectrum. And in 2018, together with UGent, imec started a spin-off for medical applications of photonics on chip.

Want to know more?

- Browse further in this magazine and read 'AR glasses have the potential to replace the smartphone within 10-15 years from now', which also talks about the interplay between the real and virtual world.

- Overview page of imec expertise on image sensors and vision systems.

- ‘Photonics-on-chip makes doctors dream’: an item from the March 2017 imec magazine.

This article is part of a special edition of imec magazine. To celebrate imec's 35th anniversary, we try to envisage how technology will have transformed our society by 2035.

Xavier Rottenberg is scientific director and group leader for wave-based sensors and actuators at imec. He received an MSc degree in Physics Engineering and a supplementary degree in Theoretical Physics in 1998 and 1999 from "Université Libre de Bruxelles", Belgium. He went on to receive his PhD degree in Electrical Engineering in 2008 from KU Leuven, Belgium. He worked for one year at the Royal Meteorological Institute of Belgium in the field of remote sensing from space and joined imec in 2000.

Published on:

9 January 2019