Augmented reality (AR) glasses are expected to become the next big thing in infotainment. They will allow us to overlay virtual objects on our regular environment and experience these objects as if they were real. AR glasses will enable exciting new applications and will dramatically change the way we interact and communicate with each other. But users will only accept them if they are unobtrusive and ‘working perfectly’, and this requirement is driving today’s technology development. Soeren Steudel, principal scientist at imec, discusses the trends in infotainment, how they translate into technology and how they might impact our lives in 2035.

It is the year 2035. Three designers collaborating on an art project have gathered in the gallery where they will soon unveil their newest creation. They all wear stylish augmented reality (AR) glasses that virtually overlay the latest mock-up of their art object on the real gallery environment. They can even touch the virtual object, manipulate it and reshape it with their hands. All three can evaluate, in real time, the effect of each manipulation from their own point of view. When they finally agree on the object’s shape and color, they send their creation to a 3D printer to produce a first prototype of their artwork.

Towards increased natural human experience

A scenario like this builds on the big trends we see in infotainment and on how those trends translate into new technologies. Infotainment can be described as the way we interact with electronics on a personal level, in numerous domains such as industry, healthcare, entertainment, education and communication. Today’s dominant infotainment designs include digital cinema, the (connected) TV, voice-controlled home audio systems such as ‘Alexa’, the PC, gaming consoles and smartphones. Taken in this order, they reflect an ongoing trend towards ever greater mobility, and hence tighter energy constraints. But they all have one thing in common: they interface with human beings through only a few senses, namely sight, hearing and, to a limited extent, touch.

Trends in infotainment – an imec view

In the future, the number of senses these systems address will definitely increase. Future infotainment systems will allow us to experience the virtual world as if it were the real world. Designed to be unobtrusive, the electronics will allow us to interact intuitively with virtual objects, gradually engaging all our senses, including smell, taste, proprioception (the sense through which we perceive the movement and position of our body) and balance. This is what drives the development of next-generation infotainment platforms. As a first step, we have already witnessed the emergence of virtual reality (VR), where fully closed headsets take us into a completely simulated world. Virtual reality, however, remains largely confined to niche activities such as gaming.

Overlaying virtual objects

The next big thing will be AR glasses, allowing us to enhance our perception of the real world. AR glasses will overlay contextual information and/or virtual objects on top of the real world. The system will interface with human beings in an unobtrusive way, using senses such as sight, hearing, touch, smell and taste. AR glasses will create tremendous possibilities in areas such as industry (e.g. to assist in product design), art, entertainment, and healthcare (e.g. to assist the surgeon during surgery), and in the way we communicate and collaborate with each other.

We can expect this vision to become reality within 10 to 15 years. The first AR glasses are already emerging, and in the coming years their performance specifications will gradually improve until they become true AR products. They have the potential to become a new platform competing with the mobile phone, and at some point even replacing it. But users will only accept the new technology once all technical challenges have been solved and the product is ‘working perfectly’, and once AR glasses have evolved from the bulky goggles of today’s early applications into lightweight, stylish designs.

From sensors and actuators to self-learning systems

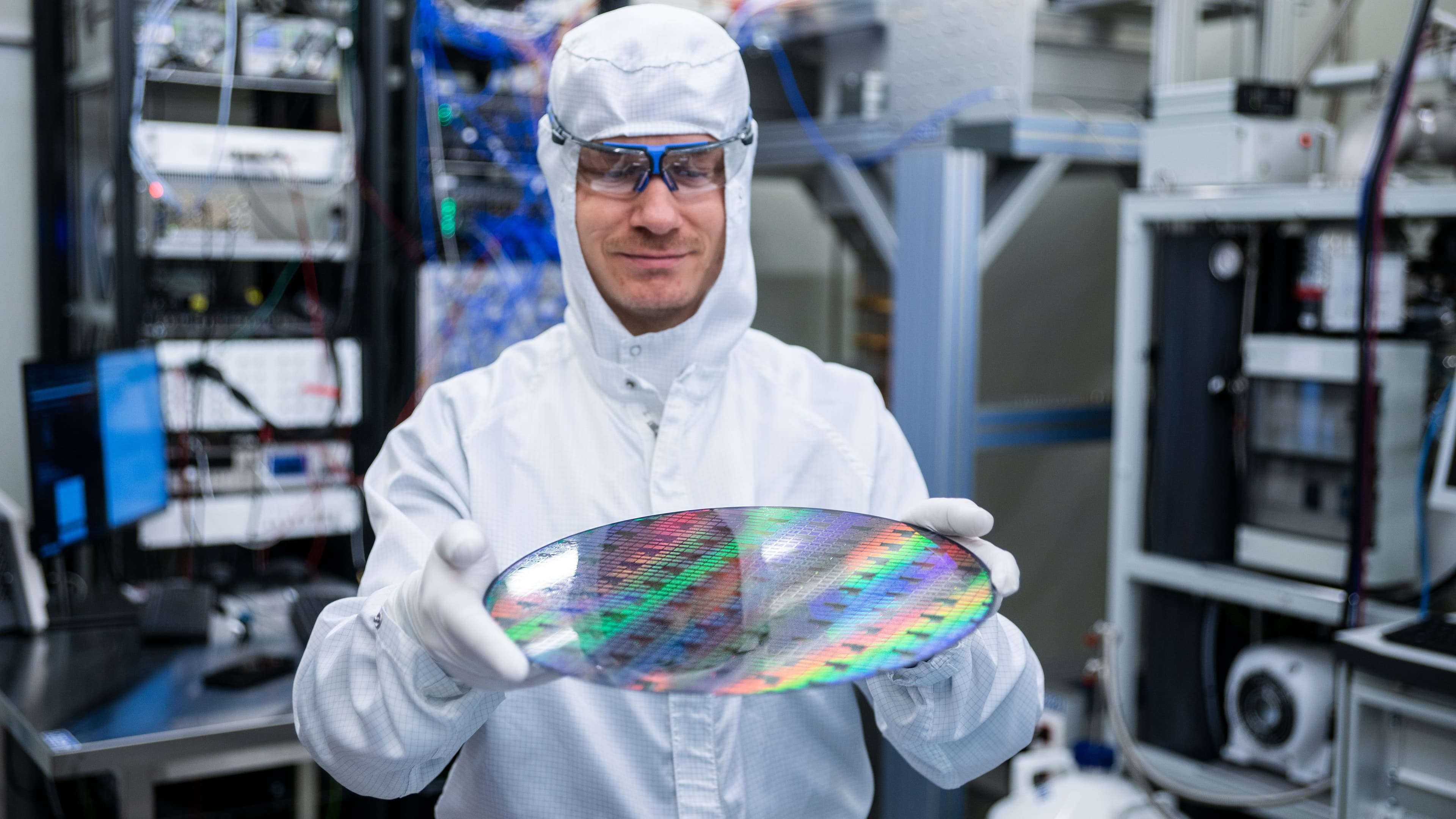

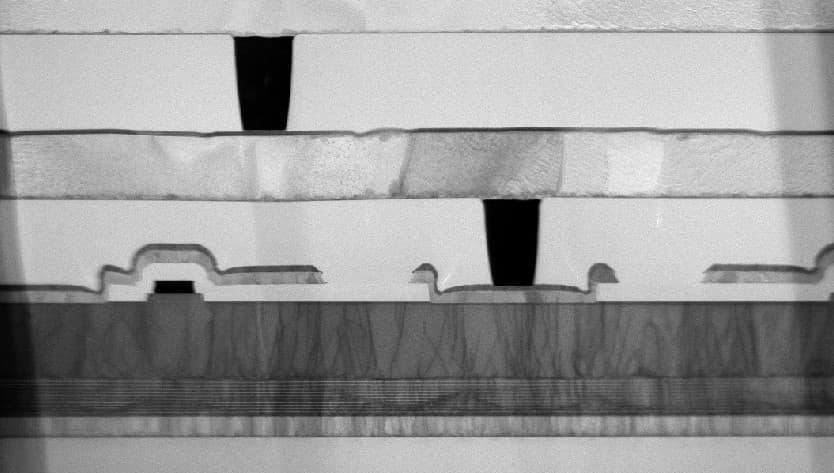

To trigger all human senses, we need innovations in low-power sensors and actuators. For sight and hearing, solutions seem relatively simple: for sight, the actuator is a display and the sensor a camera; for hearing, we can use loudspeakers and microphones. At imec, for example, we are scaling down our micro-OLED platform to enable very high-resolution displays. We also work on eye-tracking technology that will enable an enhanced AR experience, and on haptic feedback solutions that enable touch and create push-back in 3D. Developing actuators for senses such as taste, smell, proprioception (i.e., how can you manipulate your feeling of strain?) and balance, however, remains a huge challenge. One future option is to use directed ultrasound to stimulate nerves in the brain or spine, or to trigger these senses with targeted implants.

Imec develops eye-tracking solutions to improve AR and VR experiences.

We will need innovations in both hardware and software. To provide 3D visual information overlays, AR systems will require low-power image recognition and data extraction at the lowest possible latency. With such solutions, AR glasses will, for example, be able to quickly show where to find a bakery on the street you are walking along, and display the types of bread available in the shop.

This will push computation requirements and data rates far beyond what can be achieved today. For example, a high-resolution 3D video overlay may require data rates of about 1 to 10 TB/s. We will also need sensor fusion and machine learning tools, both on the glasses and in the cloud.
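The article does not say how the 1 to 10 TB/s figure was derived. As a rough illustration only, assuming the overlay is streamed as uncompressed pixels, the raw rate is width × height × bits per pixel × refresh rate × number of views. The display parameters below (8K per view, 24 bits per pixel, 120 Hz) and the helper names `raw_rate_bytes_per_s` and `views_needed` are hypothetical and chosen only to show how quickly such numbers grow. This is not necessarily the calculation behind the quoted figure.

```python
import math


def raw_rate_bytes_per_s(width, height, bits_per_pixel, refresh_hz, views=1):
    """Uncompressed pixel data rate in bytes per second.

    Assumption: no compression and no protocol overhead.
    """
    bits_per_s = width * height * bits_per_pixel * refresh_hz * views
    return bits_per_s / 8


def views_needed(target_bytes_per_s, per_view_bytes_per_s):
    """Smallest number of views whose combined raw rate reaches the target."""
    return math.ceil(target_bytes_per_s / per_view_bytes_per_s)


# Hypothetical display parameters, for illustration only:
# 8K (7680 x 4320) per view, 24 bits per pixel, 120 Hz refresh.
WIDTH, HEIGHT, BPP, HZ = 7680, 4320, 24, 120

per_view = raw_rate_bytes_per_s(WIDTH, HEIGHT, BPP, HZ)
stereo = raw_rate_bytes_per_s(WIDTH, HEIGHT, BPP, HZ, views=2)

print(f"one view:    {per_view / 1e9:.1f} GB/s")
print(f"stereo pair: {stereo / 1e9:.1f} GB/s")
for target in (1e12, 10e12):  # 1 TB/s and 10 TB/s
    print(f"views for {target / 1e12:.0f} TB/s: {views_needed(target, per_view)}")
```

Under these assumptions, a single stereo pair needs about 24 GB/s. Reaching 1 TB/s corresponds to roughly 84 such views, and 10 TB/s to roughly 838, which is the regime of multi-view 3D displays. Compression would lower these rates, and higher resolution, bit depth or frame rate would raise them.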

To avoid overloading the user with information, we need self-learning systems that know which information is relevant to their user and which is not.

And at all levels of technology development, a dramatic increase in power efficiency will be required to guarantee long battery life. Last but not least, users will only accept this new technology if AR glasses can be made lightweight, stylish, unobtrusive and comfortable, and provide a natural image to the eye.

Beyond AR glasses...

Looking further ahead, say 15 to 25 years from now, we will gradually move towards mobile holographic projection. With holographic projection, everyone in the room will be able to see 3D virtual objects without wearing glasses. These holographic projectors might be complemented with directed sound projection to address hearing, and with haptic feedback solutions to address touch.

And far beyond 2035, the next wave might be direct brain-to-computer interfaces.

Human senses will be triggered by directly stimulating specific areas of the brain. In a first phase, this could rely on non-invasive technologies such as EEG systems (which read brain activity) or ultrasound stimulation. In a later phase, we could think of brain implants. Some are already working hard to realize this vision, for example Elon Musk’s company Neuralink. Without any doubt, brain-to-computer interfacing will create endless possibilities and useful applications, for example in a medical or educational context. Whether people would welcome such a technology in their everyday lives, however, is a question we leave open.

How is imec contributing to this future?

Imec is actively contributing to this future vision with the development of a broad range of technology building blocks.

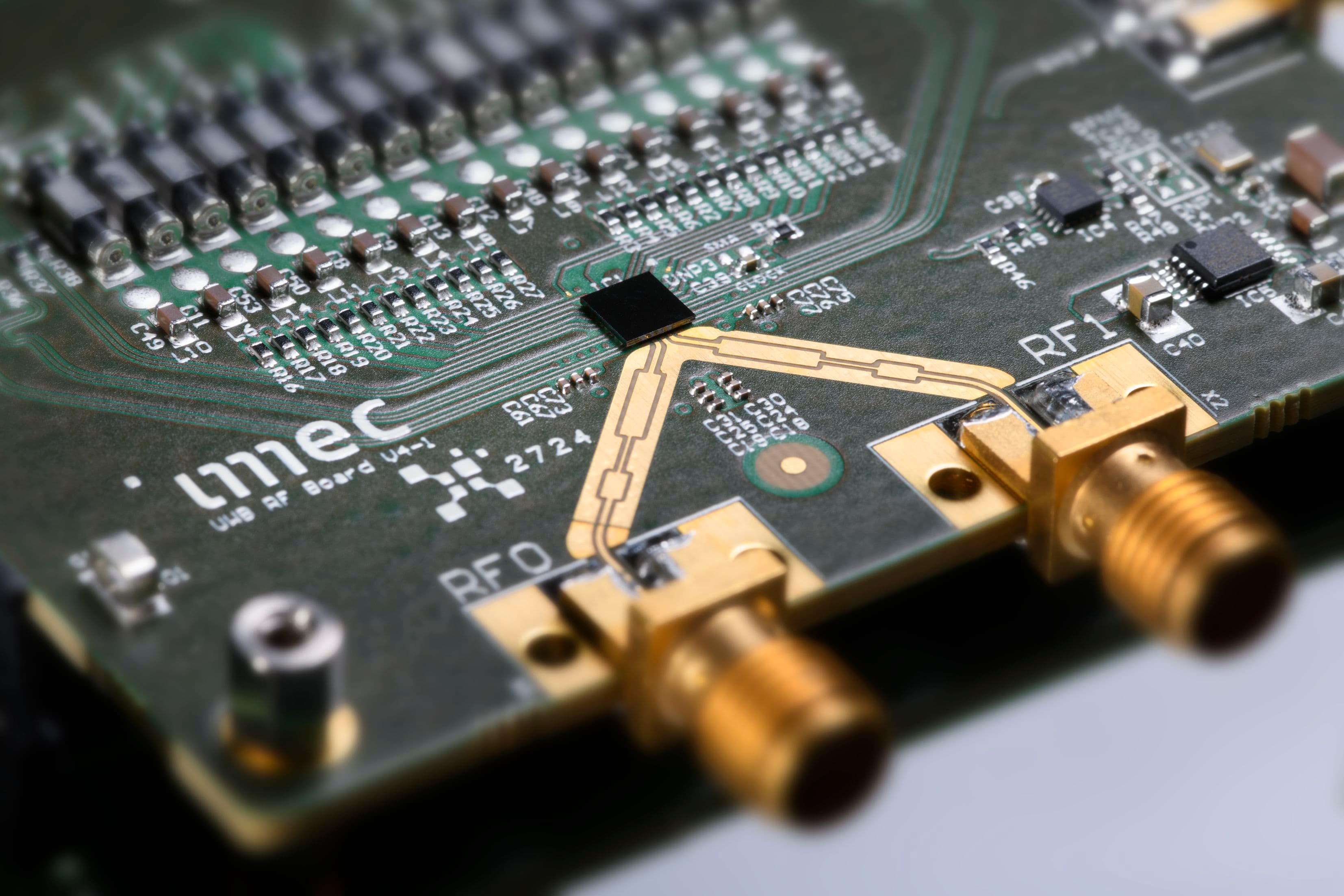

On the actuator side, imec is developing semi-transparent AM(O)LED displays and haptic feedback solutions to address touch. On the sensor side, imec is developing various solutions, including radar, lidar, sonar, imagers, EEG systems and chemical sensors. In 2018 specifically, imec achieved breakthroughs in radar technology and developed solutions for high-speed snapscan hyperspectral imaging and for hyperspectral imaging in the shortwave infrared (SWIR) range.

Imec also works on algorithms and software for sensor fusion, 3D scene mapping, object detection and machine learning. In 2018, a breakthrough was announced in eye-tracking technology, developed to enable high-quality AR/VR experiences.

Imec and Holst Centre have also presented a prototype EEG headset that can measure emotions and cognitive processes in the brain. In addition, imec contributes through its work on high-bandwidth communication, neuromorphic IC development and energy management. More information on displays, image sensors and sensor fusion, wireless communication, radar systems and data science is available on imec’s website.

Want to know more?

- In this imec magazine article, you can learn more about imec’s recent achievements in radar technology.

- Read our imec magazine articles on advanced research in organic semiconductors and innovations in OLED displays.

- ‘Bringing artificial intelligence to the edge of the IoT’ – read the imec magazine article.

- Watch the video of the imec.icon project ARIA, where augmented reality based systems have been developed for industrial maintenance procedures.

- Read the vision on telepresence by Xavier Rottenberg in this edition of imec magazine.

This article is part of a special edition of imec magazine. To celebrate imec's 35th anniversary, we try to envisage how technology will have transformed our society in 2035.

Soeren Steudel is principal member of technical staff at imec, where he leads the display activities. He has worked at imec for more than 15 years on different types of thin-film semiconductors for applications such as displays, X-ray imagers and neuromorphic computing. Soeren received a PhD in electrical engineering from KU Leuven in 2007 and an MSc from the University of Technology Dresden in 2002. For his work on high-frequency organic diodes for organic radio-frequency identification (RFID) tags, he received the Scientific American 50 award for outstanding technical achievements in 2006. Prior to his PhD, he worked for Applied Materials in Santa Clara.

Published on:

20 December 2018