A chain is only as strong as its weakest link. In vision systems, image processors have evolved at such a rapid pace that lens technology has not been able to keep up: for certain applications, it is not always possible to produce high-end lenses that meet cost and other requirements. That’s why researcher Ljubomir Jovanov and his colleagues at IPI, imec’s Image Processing and Interpretation research group at the University of Ghent (UGent), have developed a dedicated software solution that compensates for the unwanted artifacts and chromatic aberrations resulting from this quality gap. With their software, they initially target the markets for security cameras and high-end broadcast cameras.

Downsides of the race for more and smaller pixels

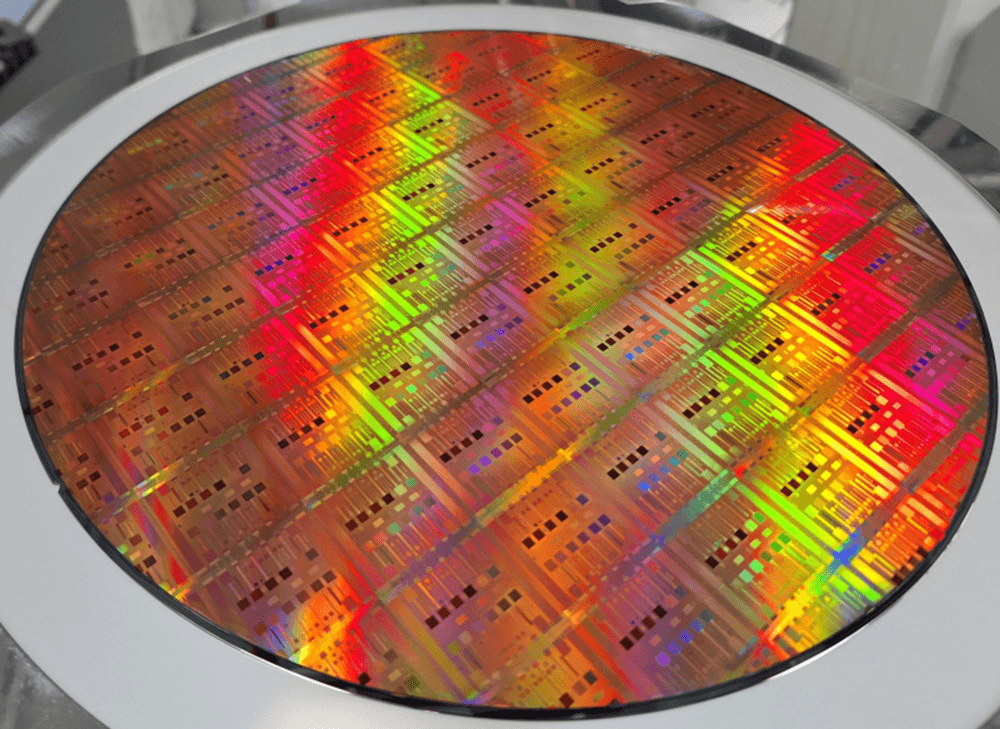

Over the last few decades, we have witnessed a dramatic increase in the resolution of imaging sensors. Only fifteen years ago, a typical consumer-grade camera had a resolution of 2 megapixels; current models on the market easily reach 25 megapixels for still images. A similar trend is visible for video cameras, which are now moving to 33-megapixel resolution (8K UHD, 7680 × 4320 pixels). While high resolution is a very desirable camera characteristic, it brings a number of challenges for camera manufacturers. Some of these challenges, like higher memory and processing power requirements, can be solved with more recent and powerful hardware.
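The memory side of this challenge is easy to quantify. The sketch below estimates the raw frame size and raw data rate for the resolutions mentioned above. The 2 bytes per pixel (a 12- to 16-bit raw sample stored in two bytes) and the 30 fps frame rate are illustrative assumptions, not figures from imec.

```python
def raw_frame_bytes(megapixels: float, bytes_per_pixel: int = 2) -> float:
    """Size in bytes of one raw frame with a single sample per pixel."""
    return megapixels * 1e6 * bytes_per_pixel


def raw_data_rate(megapixels: float, fps: float, bytes_per_pixel: int = 2) -> float:
    """Raw sensor data rate in bytes per second."""
    return raw_frame_bytes(megapixels, bytes_per_pixel) * fps


# Resolutions from the text; 2 bytes/pixel and 30 fps are assumptions.
for mp in (2, 25, 33):
    frame_mb = raw_frame_bytes(mp) / 1e6
    rate_mb_s = raw_data_rate(mp, fps=30) / 1e6
    print(f"{mp:>2} MP: {frame_mb:6.1f} MB/frame, {rate_mb_s:7.1f} MB/s at 30 fps")
```

Going from 2 to 33 megapixels multiplies both numbers by 16.5, before any processing has even started.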

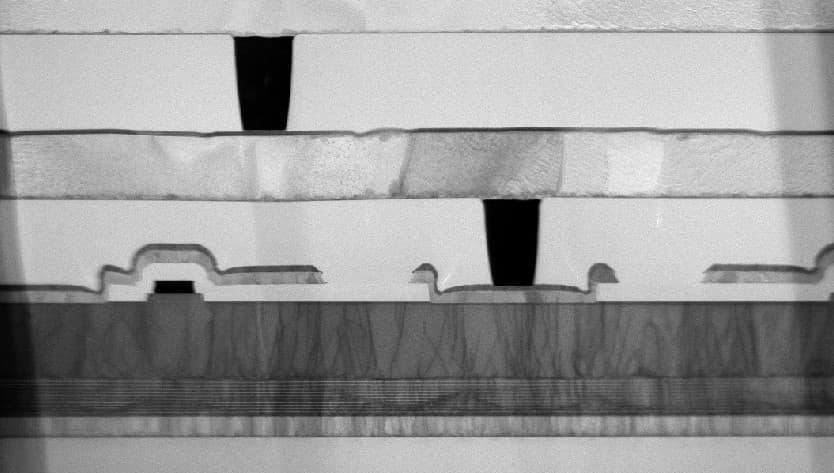

However, as pixels keep shrinking, lens imperfections become more visible. Because of lateral chromatic aberration, the color components of light coming from the same point in the scene do not reach the same pixel location, causing color artifacts in the affected regions.

Left: lateral chromatic aberration is an effect whereby color components do not reach the same focal location and, in digital imagers, cause color artifacts in the affected regions. Right (picture taken from Wikipedia): color filter arrays separate incoming light into its color components, which is the most common way to enable digital color capture.
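A simple first-order model shows why smaller pixels make the same lens look worse. Assume, for illustration only, that lateral chromatic aberration acts as a slightly different magnification per color channel around the image center. A point at distance r pixels from the center then lands r·Δm pixels away in the red or blue channel, where Δm is the relative magnification difference. With the same optics and sensor size, more pixels means a larger r at the image border, so the same Δm produces a proportionally larger misregistration. The value Δm = 0.001 below is hypothetical, and real lenses usually need higher-order radial terms.

```python
import math


def lateral_ca_shift_px(x: float, y: float, width: int, height: int, delta_mag: float) -> float:
    """Misregistration in pixels between a color channel and green at pixel (x, y).

    Assumes a first-order model: the channel is magnified by (1 + delta_mag)
    about the image center relative to green.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    r = math.hypot(x - cx, y - cy)  # distance from the optical center in pixels
    return r * delta_mag


dm = 0.001  # hypothetical 0.1 % magnification difference between channels
for name, (w, h) in {"Full HD": (1920, 1080), "4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}.items():
    center = lateral_ca_shift_px((w - 1) / 2, (h - 1) / 2, w, h, dm)
    corner = lateral_ca_shift_px(0, 0, w, h, dm)
    print(f"{name}: {center:.2f} px at center, {corner:.2f} px at corner")
```

Doubling the linear resolution from Full HD to 4K UHD with the same optics roughly doubles the color shift at the corners, while the center stays unaffected, which is consistent with artifacts that grow toward the image edges.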

Moreover, most cameras manufactured today use a single imaging sensor with a color filter array, such as the RGB-based Bayer pattern. Such filters split the incoming light into its color components, so that each pixel records only one of them. Demosaicing is therefore needed to reconstruct full color at every pixel of the final image. Because chromatic aberration shifts the color components relative to one another, this step makes the artifacts even more pronounced, especially toward the edges of the image.
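To make the demosaicing step concrete, here is a minimal pure-Python sketch assuming an RGGB Bayer layout. It simulates a single-sensor capture and reconstructs full color with bilinear interpolation, the simplest textbook demosaicing method, written as a normalized convolution so that image borders need no special handling. It is not imec's algorithm, and the function names are illustrative.

```python
from typing import List

Plane = List[List[float]]        # raw mosaic: one sample per pixel
Image = List[List[List[float]]]  # [row][col][channel], channels = R, G, B


def bayer_channel(y: int, x: int) -> int:
    """Color sampled at (y, x) for an RGGB Bayer layout: 0=R, 1=G, 2=B."""
    if y % 2 == 0:
        return 0 if x % 2 == 0 else 1
    return 1 if x % 2 == 0 else 2


def mosaic(rgb: Image) -> Plane:
    """Simulate a single-sensor capture: keep one color sample per pixel."""
    return [[px[bayer_channel(y, x)] for x, px in enumerate(row)] for y, row in enumerate(rgb)]


# Bilinear demosaicing as normalized convolution: each missing value is a
# weighted average of the neighboring samples of that color that are present.
G_KERNEL = [[0, 1, 0], [1, 4, 1], [0, 1, 0]]
RB_KERNEL = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def demosaic_bilinear(raw: Plane) -> Image:
    h, w = len(raw), len(raw[0])
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for c in range(3):
                kernel = G_KERNEL if c == 1 else RB_KERNEL
                num = den = 0.0
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w and bayer_channel(yy, xx) == c:
                            wgt = kernel[dy + 1][dx + 1]
                            num += wgt * raw[yy][xx]
                            den += wgt
                out[y][x][c] = num / den if den else 0.0
    return out
```

Bilinear demosaicing assumes that the red, green and blue samples around a pixel describe the same scene point. When lateral chromatic aberration has shifted one channel, that assumption fails and the interpolation smears the misregistration into visible color fringes.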

Software comes in where lenses cannot be optimized

While chromatic aberration can be reduced by careful lens design, such a solution is not always possible: lenses that meet the quality requirements may be prohibitively expensive or too large to fit the specified camera form factor.

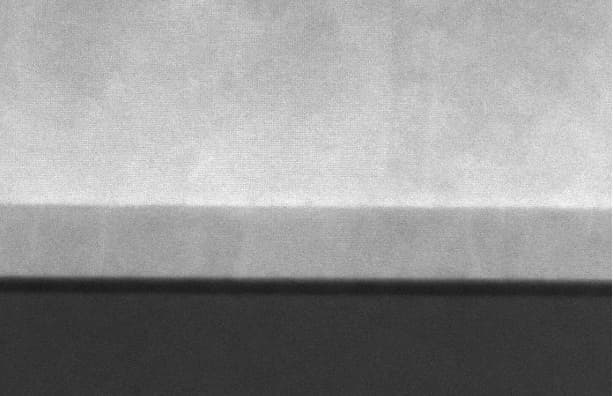

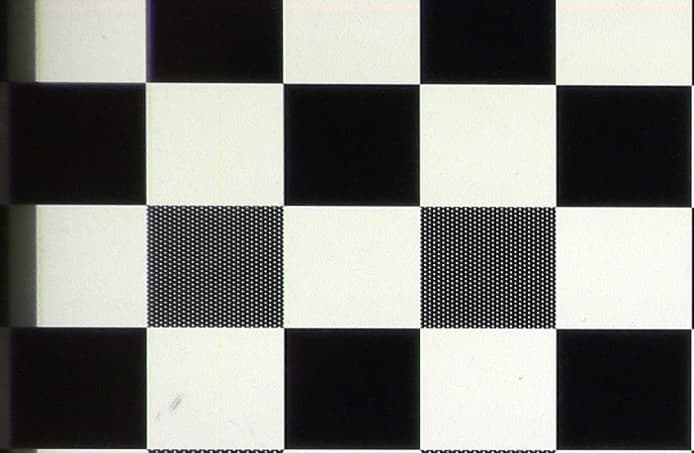

Top: raw image without software correction. The closer to the left edge of the image, the more pronounced the color artifacts. Bottom: the same image after software-based correction, with the artifacts removed.

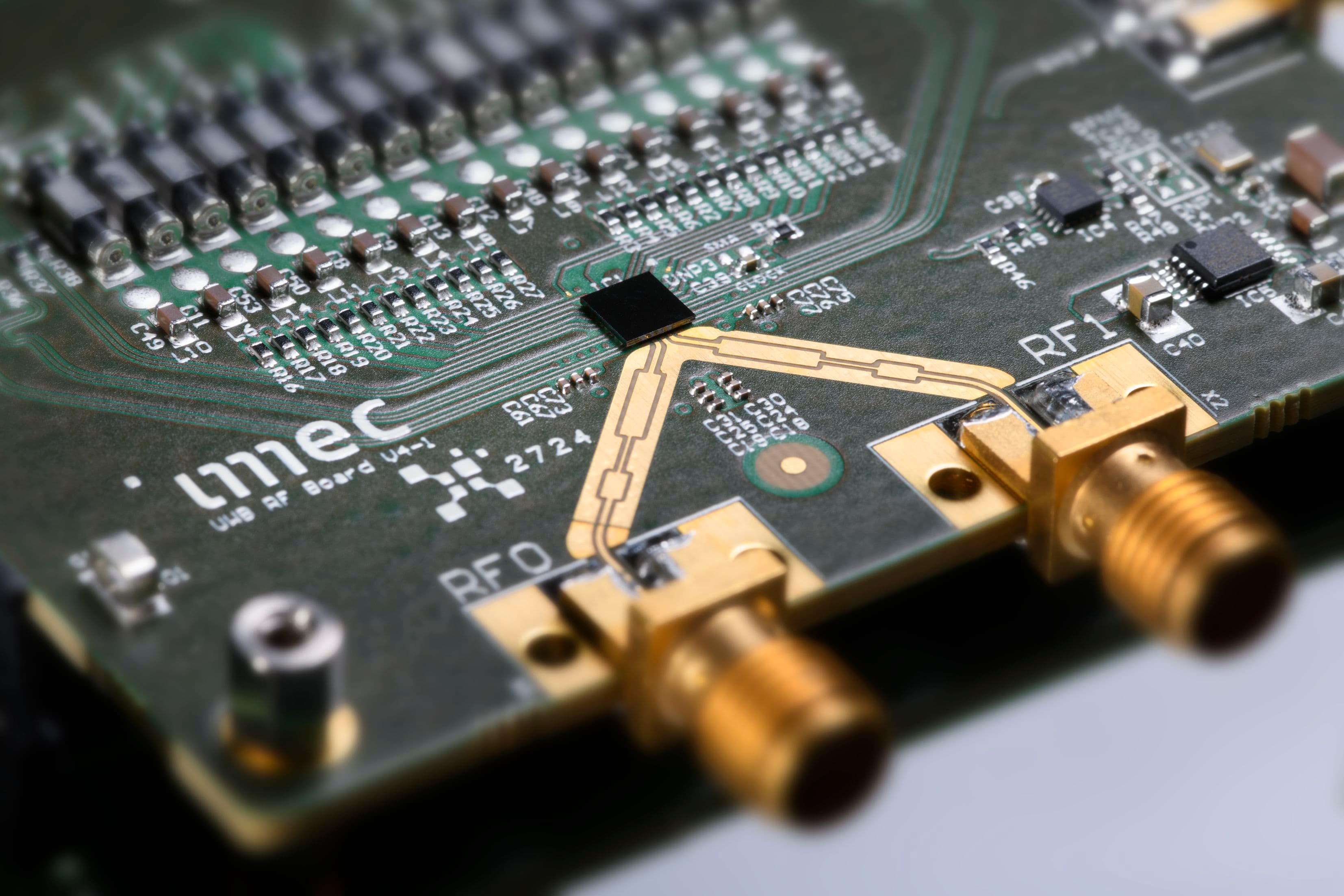

That’s why IPI, an imec research group at UGent, has developed an integrated video enhancement solution that deals with a multitude of unwanted image distortions in one go. The computational imaging-based method simultaneously performs noise reduction, chromatic aberration correction and demosaicing of the color components. Correcting each of these artifacts in a separate processing step would have been easier to program, but far too slow.
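The article does not detail imec's joint algorithm; the conference paper listed at the end covers it. The sketch below only illustrates the general idea of folding steps into a single pass. Instead of demosaicing first and then resampling the red and blue planes to undo lateral chromatic aberration (two full passes, two interpolations), each output channel is estimated directly from the raw RGGB mosaic at its aberration-corrected position. The per-channel scale factors are hypothetical calibration values, and the only "denoising" here is the mild smoothing that comes from weighted averaging; a real joint method would rely on a proper noise model.

```python
import math
from typing import Dict, List

Plane = List[List[float]]        # raw Bayer mosaic, one sample per pixel
Image = List[List[List[float]]]  # [row][col][channel], channels = R, G, B


def bayer_channel(y: int, x: int) -> int:
    """Color sampled at (y, x) for an RGGB Bayer layout: 0=R, 1=G, 2=B."""
    if y % 2 == 0:
        return 0 if x % 2 == 0 else 1
    return 1 if x % 2 == 0 else 2


def joint_demosaic_ca(raw: Plane, scale: Dict[int, float], radius: float = 2.0) -> Image:
    """Demosaic and undo lateral CA in one pass over the output image.

    scale[c] is a hypothetical magnification of channel c relative to the ideal
    image. Output channel c at pixel p is estimated at the position where that
    channel actually landed, center + (p - center) * scale[c], as a tent-weighted
    average of the raw samples of color c within `radius` pixels.
    """
    h, w = len(raw), len(raw[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    reach = math.ceil(radius)
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for c in range(3):
                sy = cy + (y - cy) * scale[c]  # where channel c was imaged
                sx = cx + (x - cx) * scale[c]
                y0, x0 = math.floor(sy), math.floor(sx)
                num = den = 0.0
                for yy in range(max(0, y0 - reach), min(h, y0 + reach + 1)):
                    for xx in range(max(0, x0 - reach), min(w, x0 + reach + 1)):
                        if bayer_channel(yy, xx) != c:
                            continue
                        # Separable tent weight: 1 at the sample position, 0 at `radius`.
                        wgt = max(0.0, 1 - abs(yy - sy) / radius) * max(0.0, 1 - abs(xx - sx) / radius)
                        num += wgt * raw[yy][xx]
                        den += wgt
                out[y][x][c] = num / den if den > 0 else 0.0
    return out
```

Because every output value is computed once from the raw samples, the frame is traversed a single time and each channel is interpolated once. Each output pixel is also independent of the others, which suits per-pixel GPU threads.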

In-line correction and highly flexible towards new settings

Until now, lens distortion correction algorithms were available only for offline processing, for example in software such as Photoshop. The imec solution performs these corrections in real time: the algorithm runs on a PC with a GPU and can process a 4K UltraHD video stream at 30 fps. When the lens zooms in or out, the software adapts its processing parameters to compensate for the changed distortion characteristics.
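The real-time requirement translates into a tight per-pixel budget. Assuming that 4K UltraHD means 3840 × 2160 pixels, the arithmetic below shows how much time is available for noise reduction, chromatic aberration correction and demosaicing together.

```python
from typing import Tuple


def pixel_budget(width: int, height: int, fps: float) -> Tuple[float, float, float]:
    """Return (pixels per second, milliseconds per frame, nanoseconds per pixel)."""
    pixels_per_second = width * height * fps
    ms_per_frame = 1000.0 / fps
    ns_per_pixel = 1e9 / pixels_per_second
    return pixels_per_second, ms_per_frame, ns_per_pixel


pps, ms, ns = pixel_budget(3840, 2160, 30)  # 4K UltraHD at 30 fps
print(f"{pps / 1e6:.1f} Mpixel/s, {ms:.1f} ms per frame, {ns:.2f} ns per pixel")
```

Roughly 4 ns per pixel amounts to about a dozen clock cycles on a single 3 GHz CPU core, which is why this kind of workload is spread across the many parallel threads a GPU offers.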

Typically, video enhancement algorithms for broadcast cameras are implemented on FPGAs (field-programmable gate arrays), which makes them difficult to adapt to a new type of lens, a new imaging sensor or a new FPGA chip.

The GPU-based solution is far more flexible: it makes it easier to modify the algorithm's parameters, port it to new hardware, and adapt it to a new lens or a higher resolution.
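Both the zoom adaptation and the adaptation to a new lens come down to changing parameters rather than hardware. One plausible way to organize this, sketched here as an assumption rather than a description of imec's implementation, is a per-lens calibration table of red and blue magnification factors measured at a few zoom positions and linearly interpolated at runtime. The table values and the function name are hypothetical.

```python
from bisect import bisect_left
from typing import List, Tuple

# Hypothetical calibration for one lens: (zoom position, red scale, blue scale).
CalibrationTable = List[Tuple[float, float, float]]

EXAMPLE_LENS: CalibrationTable = [
    (0.0, 1.0012, 0.9990),
    (0.5, 1.0006, 0.9995),
    (1.0, 1.0001, 0.9999),
]


def ca_scales_for_zoom(table: CalibrationTable, zoom: float) -> Tuple[float, float]:
    """Linearly interpolate the red/blue magnification factors for a zoom position.

    Zoom positions outside the calibrated range are clamped to the nearest end.
    """
    zooms = [row[0] for row in table]
    if zoom <= zooms[0]:
        return table[0][1], table[0][2]
    if zoom >= zooms[-1]:
        return table[-1][1], table[-1][2]
    i = bisect_left(zooms, zoom)  # first calibrated zoom >= requested zoom
    z0, r0, b0 = table[i - 1]
    z1, r1, b1 = table[i]
    t = (zoom - z0) / (z1 - z0)
    return r0 + t * (r1 - r0), b0 + t * (b1 - b0)
```

Supporting a new lens then means measuring and loading a new table, and the interpolated scales can be handed to the correction step for every frame as the zoom changes.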

This level of performance was made possible by Quasar, an in-house development environment that optimizes algorithms for heterogeneous systems.

Demonstrated in a real-life setting

Much of the development was carried out within the EU-funded EXIST program, which ran from 2015 to the end of 2018, and the technology has been demonstrated in the application areas of the industrial partners Grass Valley and Adimec. Grass Valley is a global player in content and media technology. Adimec makes reliable industrial cameras for demanding applications of global OEMs in the machine vision, healthcare and global security industries. Imec welcomes camera manufacturers, security companies and professionals involved in still or moving image correction to explore transferring this technology into their solutions.

Want to know more?

- Conference paper on the subject of joint denoising, demosaicing, and chromatic aberration correction for UHD video.

- Imec magazine article: ‘Image sensor combining the best of different worlds’, highlighting image sensor breakthroughs that imec also achieved within the EU EXIST project.

- Imec magazine article: ‘Real-time SDR to HDR video conversion’: another software solution by IPI, imec’s Image Processing and Interpretation research group at the University of Ghent.

- Homepage of Quasar, the platform developed by IPI for fast development of GPU code.

About Ljubomir Jovanov

Ljubomir Jovanov is a researcher at IPI, imec’s Image Processing and Interpretation research group at the University of Ghent. He was born in Pančevo, Yugoslavia, on July 13, 1975. He received the dipl.ing. degree in Electrical Engineering and the mr. ing. degree in Telecommunications from the University of Novi Sad, Serbia, in 2000 and 2005, respectively. In 2006, he joined the Department of Telecommunications and Information Processing of Ghent University, Belgium, as a PhD student. His main research interests are image, video and 3D restoration, image analysis, and processing of multimedia data. In 2011, he defended his PhD thesis, entitled "Advanced Restoration Techniques for 3D Images and Video".

Published on:

2 April 2019