Computer scientists have always been fascinated by the human brain. After 50 years of relentless scaling, they have finally succeeded in building supercomputers that approach its computing power. But whereas our thinking organs keep happily humming on a mere 20 W, their electronic counterparts have to burn through megawatts to arrive at the same results. That is why, beginning in the 1980s, research groups started looking at ways to build hardware that more closely resembles our brain: circuits that use orders of magnitude less energy, that are fault-tolerant, and that become smarter over time, to name some of the most important characteristics of our bio-circuitry.

Of these groups, some have looked to build circuitry that exactly mimics the functioning of our brain cells and their tight interconnections. Others have been designing hardware that has the desirable characteristics of brain computing, but that does not necessarily work in the same way. Their goal is to build super-efficient processors for tasks such as deep learning, image recognition or robotics. That is also imec’s take. As part of its efforts to scale future computing beyond what is possible with silicon CMOS, the R&D center has identified a number of challenges where its expertise may be crucial for designing efficient brain-like chips. Francky Catthoor and Diederik Verkest, two scientists immersed in the field, talked with imec magazine about imec’s neuromorphic computing R&D.

A dense network of switches

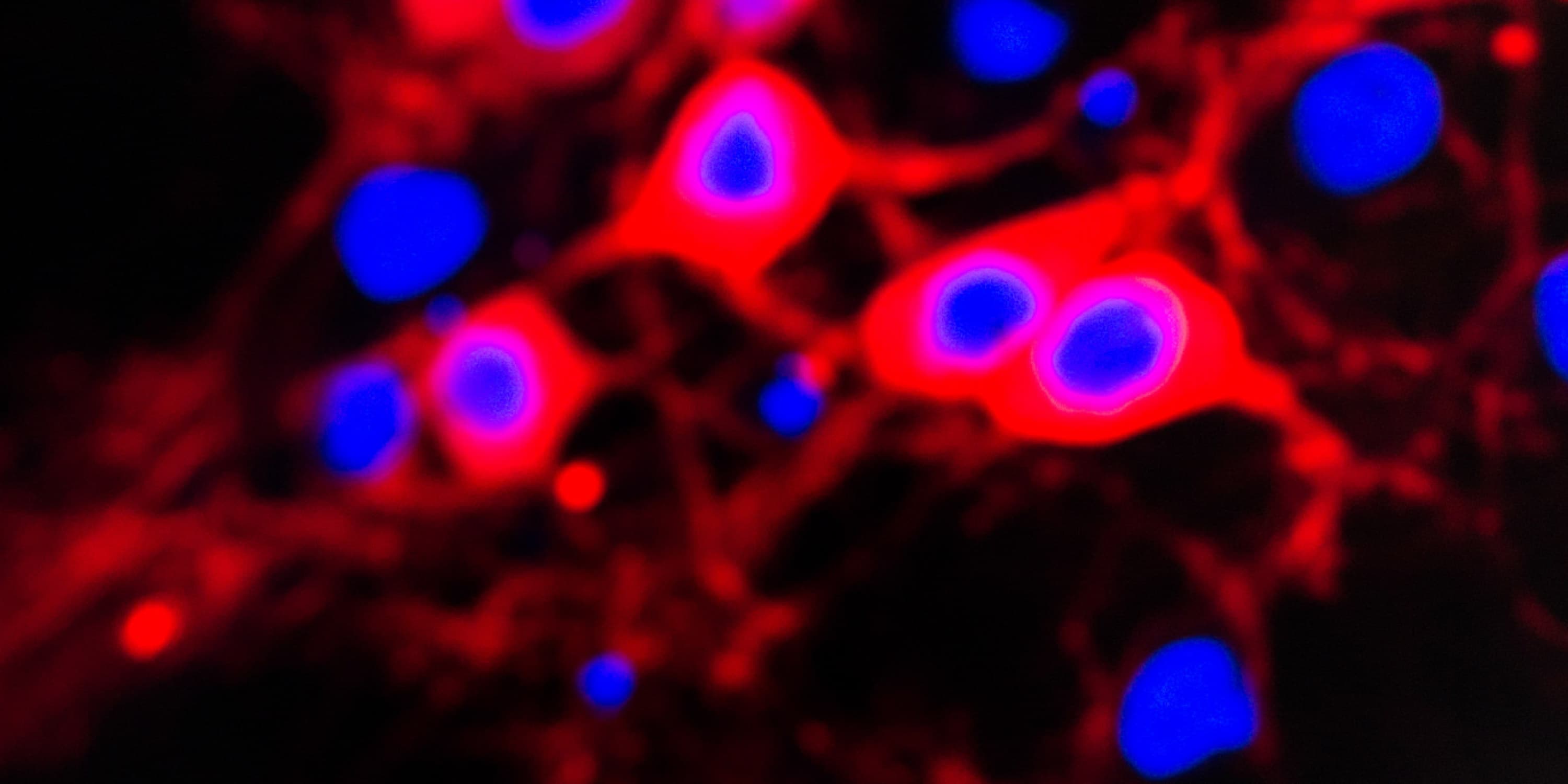

In conventional computers, memory and processing are separated, and a lot of the energy that computers consume is spent on transporting data from and to memory. Not so in the brain, where memory and processing are co-localized, distributed among an enormous number of relatively primitive processing nodes (the neurons) and their interconnections (the synapses). This architecture goes a long way to explain the brain’s energy efficiency, and it has therefore become the model for neuromorphic computing.

“The human brain has about 10¹¹ neurons,” says Francky Catthoor. “If these were all mutually interconnected, this would result in 10²² synapses, an unimaginable number for which there simply is no physical space. So the brain compromises, combining tight local interconnections with gradually more sparse long-distance interconnections between groups and clusters of neurons, resulting in a still huge 10¹⁵ synapses.”
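The arithmetic behind these figures is easy to check. The short sketch below is an illustration only: it takes the round numbers quoted above at face value, and the variable names are our own. It shows that the brain's compromise still leaves each neuron with about ten thousand synapses on average.

```python
# Back-of-the-envelope check of the round figures quoted above.
neurons = 10**11            # approximate neuron count quoted in the text
actual_synapses = 10**15    # approximate synapse count quoted in the text

# All-to-all wiring: every neuron connects to every other neuron.
all_to_all = neurons * (neurons - 1)

# Average number of synapses per neuron in the real, sparser network.
synapses_per_neuron = actual_synapses // neurons

# How many times sparser the real wiring is than the all-to-all case.
sparsity_factor = all_to_all // actual_synapses

print(f"all-to-all synapses: {all_to_all:.1e}")
print(f"synapses per neuron: {synapses_per_neuron}")
print(f"sparsity factor:     {sparsity_factor:.1e}")
```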

One of the major challenges in neuromorphic computing has been to mimic this tight interconnection scheme in hardware. A particular issue is that the brain’s connections dynamically change over time, with new synapses growing and old ones getting pruned. Connections on a silicon chip, in contrast, are static once they are processed.

Diederik Verkest: “The synapses are usually implemented with some type of crossbar architecture. That is a collection of conducting wires laid out in a matrix (or cube), so that each input line connects with all outgoing lines. At each crossing of two lines, there is a switch that either passes or blocks the current flowing from one node (neuron) to the other. In a neuromorphic chip, these switches represent the synapses. They contain the intelligence of the system: the ability to hold data, to process it, and to learn from experience. So they should be made programmable and self-adaptable.”
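To make the crossbar idea concrete, here is a minimal software sketch. It is not imec's design: the function names, the use of a plain list of lists, and the convention that a weight of 0.0 means an open switch are all assumptions made for illustration. Rows stand for input lines, columns for output lines, and each crosspoint holds a programmable weight.

```python
# Toy model of a crossbar: rows are input neurons, columns are output neurons.
# Each crosspoint holds a programmable weight; 0.0 means the switch is open.

def make_crossbar(n_inputs, n_outputs):
    """Return an n_inputs x n_outputs grid with every switch open."""
    return [[0.0 for _ in range(n_outputs)] for _ in range(n_inputs)]

def program(crossbar, row, col, weight):
    """Set the weight of the switch where input `row` crosses output `col`."""
    crossbar[row][col] = weight

def propagate(crossbar, inputs):
    """Sum, per output line, the input signals scaled by each switch weight."""
    n_outputs = len(crossbar[0])
    outputs = [0.0] * n_outputs
    for row, signal in enumerate(inputs):
        for col in range(n_outputs):
            outputs[col] += signal * crossbar[row][col]
    return outputs

xbar = make_crossbar(3, 2)
program(xbar, 0, 0, 0.5)    # input 0 -> output 0
program(xbar, 2, 0, 0.25)   # input 2 -> output 0
program(xbar, 1, 1, 1.0)    # input 1 -> output 1

result = propagate(xbar, [1.0, 1.0, 1.0])
print(result)
```

Because the weights live at the crosspoints themselves, storage and computation happen in the same place, which is the property the hardware is after.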

Because building these crossbars is so complex, the solution preferred by most researchers has been to keep the computation local to a restricted group of neurons, say a few hundred or thousand, avoiding all longer-distance connections. But even with an array of 100x100 neurons, implementing a classic crossbar for all the connections already gets quite complex.

Scaling revisited: from a few hundred neurons to a brain

“Such smaller but fully-connected designs are able to compute through some pretty tough problems, but they cannot run the most advanced algorithms needed to solve e.g. deep learning problems,” says Catthoor. “And most important, it’s not at all easy to scale them to many thousands of neurons, let alone to the level of neurons and synapses in our brain.”

One solution is to use the small, densely interconnected brain chips as building blocks for larger structures. Between these building blocks, scientists then implement some level of global interconnections, e.g. by connecting individual chips through a shared high-speed data bus or through a network-on-chip (NoC) approach. The result is a hierarchical connection topology, with sparse global connections and dense local connections.
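A rough count shows why the hierarchical approach pays off. The sketch below is a simplification under stated assumptions: the split into 100 clusters of 100 neurons and the 16 global links per cluster are hypothetical numbers chosen for illustration, and the function names are our own.

```python
def flat_crosspoints(n_neurons):
    """Crosspoints in one crossbar connecting every neuron to every neuron."""
    return n_neurons * n_neurons

def hierarchical_crosspoints(n_clusters, cluster_size, links_per_cluster):
    """Dense crossbar inside each cluster plus a few global links per cluster."""
    local = n_clusters * cluster_size * cluster_size
    global_links = n_clusters * links_per_cluster
    return local + global_links

# Hypothetical sizing: 10,000 neurons, either as one flat array
# or as 100 densely connected clusters of 100 neurons each.
flat = flat_crosspoints(100 * 100)
tiered = hierarchical_crosspoints(n_clusters=100, cluster_size=100,
                                  links_per_cluster=16)

print(f"flat crossbar: {flat:,} crosspoints")
print(f"hierarchical:  {tiered:,} crosspoints and links")
```

In this toy sizing, the hierarchical layout needs roughly a hundred times fewer connection points than the flat crossbar, at the price of giving up direct links between most pairs of neurons.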

Francky Catthoor: “A promising architecture is the multistage network-on-chip, with which the group of Giacomo Indiveri at ETH Zurich has made a lot of progress. Such multistage NoCs were originally designed to efficiently run parallel tasks on a multicore chip. They are reasonably scalable and flexible, but they still need too much energy for our purposes. Therefore we are looking at yet another architecture: the fully dynamic hierarchical segmented bus network. We are convinced that it is uniquely suited to tackle this problem, so we’ve taken out a patent and are now building a proof-of-concept. A first test we’ve set ourselves is to run a neuromorphic application simulator from UC Irvine (USA) that mimics the computation of a million neurons.”

Work on this emerging domain at imec started some two years ago and included people like Srinjoy Mitra, Rudy Lauwereins, Praveen Raghavan and Siebren Schaafsma. Today, part of imec’s efforts in neuromorphic computing is embedded in the EC Horizon 2020 project NeuRAM3. The project’s goal is to implement novel hardware capable of processing realistic problems at ultralow power. Imec’s contribution is to make an overall architecture that is scalable, highly flexible, and uses very little energy. As part of the project, imec will also build a first hardware prototype, using thin-film transistor technology developed in-house by Soeren Steudel’s team. The project’s other technology partners, CEA-Leti and STMicroelectronics, will implement the design of a 100x100 array of locally interconnected neurons and fabricate a dedicated chip in 28 nm technology.

The intelligent hardware synapse

Each neuron in the brain has a number of incoming connections and one outgoing. The incoming synapses receive signals, which are integrated (summated) in the neuron. Subsequently, when a threshold is reached, the outgoing synapse fires. But here’s the thing: not all synapses contribute equally. Each has a specific weight that determines its contribution to the neuron’s firing. And these weights will change over time, as a result of all the preceding signals that pass through the synapse. That’s how the brain learns.
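The behavior described above can be sketched in a few lines of software. This is a deliberately simplified illustration, not a model of real neurons or of imec's hardware: it sums the weighted inputs in a single step rather than over time, and the learning rule (strengthening every synapse that was active when the neuron fired) is an assumed Hebbian-style rule chosen for brevity. The class and parameter names are our own.

```python
class Neuron:
    """Toy threshold neuron with weighted inputs and a simple learning rule."""

    def __init__(self, weights, threshold, learning_rate=0.125):
        self.weights = list(weights)        # one weight per incoming synapse
        self.threshold = threshold          # firing threshold
        self.learning_rate = learning_rate  # assumed step size for learning

    def step(self, inputs):
        """Integrate the weighted inputs and report whether the neuron fires."""
        total = sum(w * x for w, x in zip(self.weights, inputs))
        fired = total >= self.threshold
        if fired:
            # Assumed Hebbian-style update: synapses that carried a signal
            # when the neuron fired become stronger.
            self.weights = [w + self.learning_rate * x
                            for w, x in zip(self.weights, inputs)]
        return fired

neuron = Neuron(weights=[0.5, 0.25, 0.0], threshold=0.6)

first = neuron.step([1, 0, 0])   # input 0 alone: 0.5 is below the threshold
second = neuron.step([1, 1, 0])  # inputs 0 and 1 together: 0.75 reaches it
third = neuron.step([1, 0, 0])   # input 0 alone again, after learning

print(first, second, third)
print(neuron.weights)
```

The first and third calls present the same input, yet only the third makes the neuron fire: the weight of the first synapse grew when the neuron fired on the second call. The history of signals has changed the response, which is the essence of learning in this picture.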

“Of course it would be possible to mimic this behavior digitally by storing a number for each incoming connection of a neuron,” says Diederik Verkest. “But then we would have to call upon separate storage and use far too many transistors and far too much energy in comparison to the brain.”

So what imec is looking for is a new component to implement this synaptic behavior: a component for the crossbar switches with analog behavior, one that changes its resistance, and thus the current it passes, depending on its previous experience.

Diederik Verkest: “A close analogue is the RRAM cell (resistive random-access memory). This is a type of nonvolatile memory that we have been working on as a replacement for Flash technology. It works by changing the resistance across a specific dielectric solid-state material, a memristor, by applying an electric field. If we push current through an RRAM cell hard and fast, it becomes a digital device; but if we run current through it gently and slowly, it behaves like an analog device.”

In its RRAM research, imec has so far been optimizing memory cells for digital behavior. But with all the experience that the researchers have gained, they can also optimize for a cell that changes its resistance in an analog way, depending on the history of the currents that were applied previously.
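The difference between the two regimes can be illustrated with a toy model. The sketch below is not a physical model of an RRAM cell: the class name, the normalized conductance range from 0 to 1, and the linear, clipped update are all assumptions made purely for illustration. Strong pulses drive the cell straight to one of its two extremes, while gentle pulses nudge it step by step, so its state records the history of what was applied.

```python
class ToyResistiveCell:
    """Illustrative cell whose conductance is bounded between g_min and g_max."""

    def __init__(self, g_min=0.0, g_max=1.0):
        self.g_min = g_min
        self.g_max = g_max
        self.conductance = g_min   # start in the high-resistance state

    def apply_pulse(self, strength):
        """Shift the conductance by `strength`, clipped to the allowed range.

        Positive values raise the conductance, negative values lower it.
        """
        updated = self.conductance + strength * (self.g_max - self.g_min)
        self.conductance = min(self.g_max, max(self.g_min, updated))
        return self.conductance

# Analog regime (assumed): three gentle pulses accumulate gradually.
analog_cell = ToyResistiveCell()
for _ in range(3):
    analog_cell.apply_pulse(0.125)

# Digital regime (assumed): one strong pulse saturates the cell,
# and a strong pulse of opposite sign resets it.
digital_cell = ToyResistiveCell()
set_state = digital_cell.apply_pulse(1.0)
reset_state = digital_cell.apply_pulse(-1.0)

print(analog_cell.conductance, set_state, reset_state)
```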

The application sweet spot

Francky Catthoor: “Of course, any computational problem can also be solved on a digital computer. And with the upcoming exascale computers, energy-hungry as they are, we’re ready to tackle deep learning in a big way. But at the same time, the IoT community is looking to build sensors, machinery and robots that have as much intelligence as possible, but that may run on a battery or on energy harvested from the sun. So there certainly is a big incentive to design highly efficient brain-like hardware.”

These brain-on-chips may not be exact copies of our brain circuits, but nature teaches us that it is physically possible to build much better computers than we do today: computers that we urgently need for the intelligent sensors and robots of the connected world. These are small, low-power, long-lasting devices that have to stand their ground amid an ever-growing stream of data, continuously adapt to their environment, and even learn and become smarter over their lifetime.

Published on: 21 December 2016