This article is the first in a series of articles focusing on how semiconductor technologies can revolutionize proteomics.

Want to stay informed about this topic?

Twenty years ago, the monumental Human Genome Project was completed: after thirteen years and concerted efforts from hundreds of researchers across the globe, the entire human genome was finally sequenced.

A lot has changed since then. While sequencing one genome cost about 100 million dollars at the time, we can now do the same for just over one hundred dollars. A massive cost reduction, which has enabled genome sequencing to become a powerful tool in the clinic.

From liquid biopsies to molecular tumor characterization or genetic diagnosis of rare and unknown diseases, next-generation sequencing is paving the way for truly personalized medicine, with treatment options and prognostics tailored to each patient’s unique genetic makeup.

A deeper level of understanding

Often referred to as a ‘blueprint’, our DNA serves as the fundamental code directing the biological processes in all our cells. This genetic code is transcribed into RNA molecules, which act as messengers to translate genetic instructions into functional proteins.

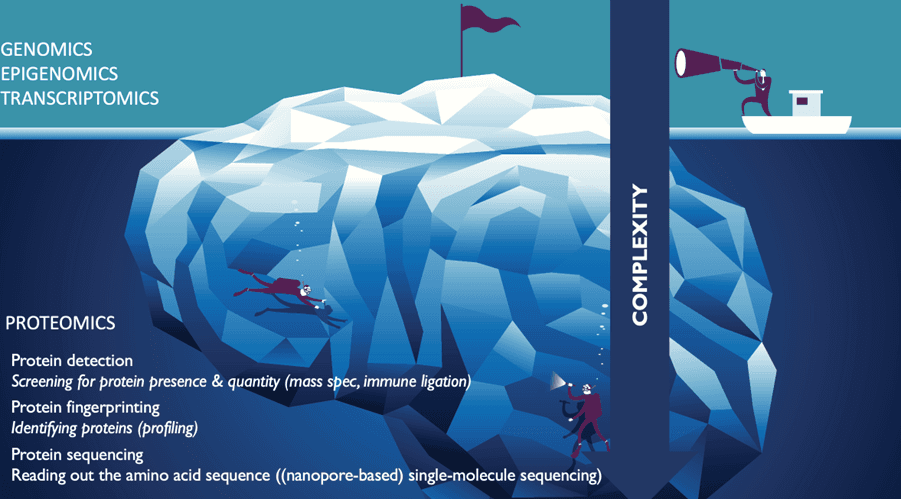

“We’ve arrived in a reality where we can digitize both the genome and the transcriptome, making it much easier to hunt down signals of health and disease,” says Peter Peumans, imec’s CTO Health.

“The next logical step would be to digitize the proteome, as proteins ultimately shape the complexity of living organisms, playing diverse and essential roles in virtually every aspect of cellular structure, function and regulation.”

Indeed, despite the power of genomic or transcriptomic profiling, in the end they only provide indirect measurements of the practical reality within biological cells. The proteome tells a much more complete story.

“Scientists are digging deeper and deeper into the proteome nowadays,” say Kris Gevaert and Simon Devos, both proteomics experts affiliated to VIB.

Gevaert is Group Leader and Professor at the University of Ghent. His team is interested in applying new proteomics technologies in life sciences. Devos heads VIB’s Proteomics Core, an expertise hub and service facility for the local and international life science community. They clearly experience a steep increase in scientists' interest in emerging proteomic approaches.

“When it comes to proteomics technology, we’ve seen massive improvements in the last decade.” Devos says. Still, to digitize the proteome, as Peumans envisions, we will first need to tackle some major hurdles.

"There’s a simple reason why we’re ahead with genomics versus proteomics, and that’s the much larger complexity of the proteome.”

Far more complex than DNA

It is not a coincidence that researchers set out on a mission like the Human Genome Project long before they even started thinking about doing the same for the proteome. “There’s a simple reason why we’re ahead with genomics versus proteomics,” says Peumans, “and that’s the much larger complexity of the proteome.”

It starts with the basic makeup of both biomolecules. While DNA and RNA are polymers consisting of just four building blocks, proteins are made up of a unique combination of 20 different amino acids.

To make matters even more challenging, most amino acids can undergo a wide range of post-translational modifications, including methylation, phosphorylation, ubiquitination and glycosylation, all of which alter both the protein’s structure and its function.

From a technical perspective as well, analyzing proteins is far more challenging than analyzing DNA or RNA.

First of all, there is a very large dynamic range between highly abundant proteins and those with a very low molecular concentration. There is also a lot of variability in the biophysical behavior of different proteins—some are highly soluble, or perhaps prone to degradation, others not at all.

Secondly, there is no straightforward way to amplify proteins like we are able to do for genes using PCR. “That means that, by definition, you need to be able to detect single molecules if you are to map the entire proteome,” explains Peumans.

“All these reasons combined lead to the fact that measuring the proteome implies a very high throughput system,” says Peumans. “About a 1,000 times higher than what we currently achieve with next-generation sequencing.”

Yet, dozens of innovative companies are pushing new approaches to detect, profile and sequence proteins en masse, ushering in a new era of proteomics research. With these emerging technologies, it looks like today, we can finally start dreaming about ways to overcome the obstacles that have long hindered us to grasp the full complexity of the proteome and its implications for health and disease.

Stay informed about how semiconductor technologies revolutionize proteomics

Mass spectrometry: the gold standard, still improving

Mass spectrometry has been the gold standard in protein research. Mass spectrometers identify a molecule by reading the mass-to-charge ratio of its ion. After ionizing protein molecules, fragmenting them into smaller peptides and measuring their mass-to-charge ratio, the resulting mass spectra are matched against protein sequence databases to determine the identity of the proteins present in a sample.

“We basically cut up proteins into smaller pieces and analyze those,” explains Gevaert. “We call it bottom-up proteomics. We can easily detect 8,000 or 9,000 different proteins within one sample .”

Over the past years, mass-spec-based proteomics has made huge strides, especially when it comes to sensitivity and speed. “Thermo Fisher’s Orbitrap Astral and Bruker’s timsTOF Ultra can measure 4,000 to 8,000 proteins from minute sample amounts in under 20 minutes,” says Devos. “Both instruments have been very successful and relevant innovations in the field, allowing us to go after smaller and smaller sample sizes, and to analyze the protein composition in single cells or even single organelles.”

Besides the innovations in terms of machinery, there has been enormous progress in the way researchers use the equipment, says Devos: “Today, we focus much more on data-independent analysis: we systematically acquire mass spectrometry data without relying on predefined assumptions or hypotheses, enabling comprehensive and unbiased interrogation of the proteome.”

Researchers also leverage machine learning algorithms and computational techniques to process vast amounts of proteomic data, extract meaningful insights, and identify patterns that might otherwise go unnoticed.

“Machine learning is changing the way we approach mass spectrometry. For example, researchers now often use AI to predict a fragmentation mass spectrum of a specific protein or peptide and run the analyses to check if the results match. This allows them to anticipate and validate results with unprecedented accuracy and efficiency.”

Immuno-ligation: increasingly powerful and specific

Immuno-ligation proteomic methods are another, more mature approach to protein analysis. These high-throughput, multiplex immunoassays, often referred to as massively parallel ELISAs, enable the simultaneous detection and quantification of multiple proteins within a single sample.

In its essence, immuno-ligation relies on antibodies or aptamers (single-stranded DNA or RNA molecules) that specifically bind to target proteins, allowing researchers to identify and measure the abundance of these proteins with high sensitivity and specificity.

Companies like Alamar Biosciences, Somalogic and Olink are developing innovative platforms that offer increasingly accurate and comprehensive protein profiling for smaller and smaller samples.

With a focus on developing antibodies and aptamers specifically targeted towards human proteins, these techniques are particularly well-suited for clinical applications, offering a streamlined approach to biomarker discovery, disease diagnosis, and monitoring treatment responses.

"The challenge with most of these approaches lies in making them massively parallel."

Protein fingerprinting: promising profiling techniques

Unlike bulk assays that provide measurements to detect the presence or absence of certain proteins within a sample—like mass spec and immuno-ligation assays allow you to do—single molecule profiling or fingerprinting techniques enable the direct measurement and characterization of individual proteins in real time.

By interrogating proteins one by one, researchers hope to gain unprecedented insights into their dynamics.

“The challenge with most of these approaches lies in making them massively parallel,” says Gevaert. “When you break up a cell, what you have is a bunch of proteins with a gigantic diversity in terms of concentration. Histones will be most abundant, but some other proteins will be present at 10-million-fold smaller concentrations.”

Erisyon's technology platform relies on immobilizing peptides on a chip and degrading them step by step to be able to identify a protein. The company does so based on the contribution of four key amino acids to a protein’s composition, which they assert is sufficient for protein identification.

Players like Nautilus take a novel approach to identify and quantify single proteins in any sample using a unique ‘hyper-dense single molecule protein nanoarray’: a flow cell with 10 billion(!) landing pads where single protein molecules can be anchored while highly specific antibodies and reagents pass through.

Devos: “This is a company that is up and rising. Their approach is to profile single proteins, not by sequencing them, but by recognizing unique molecular signatures. In theory, their approach allows for huge throughput and multiple runs per sample, but, as with Erisyon, the verdict is still out on the power of the technology, especially when it comes to recognizing post-translational modifications or different protein isoforms.”

Single-molecule sequencing: the holy grail, but still a moonshot?

Reading out the exact amino acid sequence of each protein one by one, would in theory be the most straightforward way to map the proteome.

One approach to do so is based on nanopore technology, where proteins are threaded through nanoscale pores, which allow amino acids to be read out directly as they pass through the hole.

Alternatively, non-nanopore-based methods employ other innovative techniques, like single-molecule fluorescence in the case of Quantum-Si or reverse translation in the case of Encodia, to elucidate protein sequences.

Similar to Erisyon, Encodia’s technology platform relies on immobilizing peptides on a chip and degrading them step by step. However, in this case, the aim is essentially to translate proteins back into a DNA code that can be read out by next-generation genetic sequencing.

The company was founded by the scientists behind Illumina, with the aim of making Encodia a transformative force in the proteomics field, similar to how Illumina revolutionized genomics, by democratizing access to proteomics.

Quantum-Si's sequencing platform leverages a combination of semiconductor tech and biochemistry to directly sequence individual proteins.

Proteins are first digested into shorter peptides that are immobilized onto the surface of a chip, where they are attached to a well with reagents that label the terminal amino acid with different fluorescent tags. The unique fluorescent signal is read out step by step as the terminal amino acids are removed one by one and the process repeats until the whole peptide is sequenced.

Similar to Erisyon and Encodia’s degradation approach, Quantum-Si's sequencing technology is based on the disassembly of peptides – protein fragments in other words – into individual amino acids.

Having a way to sequence individual proteins from start to finish instead, and including modifications, would truly be a game changer, says Gevaert: “If we could do this at the level of single cells, for example with nanopore sequencing, it would mean we finally get a comprehensive view of cellular heterogeneity beyond what RNA sequencing can tell us.

Devos agrees that sequencing intact proteins would be very powerful and valuable but adds that it represents significant challenges. “Peptides are much easier to manipulate; it is more straightforward to bring them into solution, to separate them on a column, or to immobilize them on a surface. Especially when you consider more complicated proteins, like membrane proteins for example, the challenge of handling them as single intact molecules is considerable.”

With nanopore sequencing, proteins are guided through nanoscale pores. As the protein molecules pass through, changes in electrical signals unique to the specific amino acid traversing the pore allow for a real-time read out of the protein sequence.

One open question is whether it would be better to opt for a pure solid-state approach to build nanopores, in a silicon nitride membrane for example, or to employ biological pores. In the latter case, solid-state pores would essentially act as placeholders for smaller biological pores.

“No two solid-state pores are the same,” says Peumans. “A biological pore is atomically perfect, and you can make it much smaller too. A solid-state pore is not going to work as well as a biological one, but it has the benefit of potentially achieving a higher throughput and offering a simple workflow. Biological pores, on the other hand, present questions concerning how far chemistry can be pushed and the challenge of preventing pore degradation while unraveling proteins.”

This ongoing debate underscores the complexities and trade-offs inherent to nanopore proteomics technology, which is still in full development. Innovative solutions will have to balance structural integrity, functional performance, and longevity.

Israeli startup Genopore is working on a low-cost, semiconductor-based protein detector using Opti-Pore, an optical nanopore sensor technology developed by one of its co-founders.

Gevaert has his reservations, at least for now: “I think it is fantastic that we see the field move ahead in this direction, but I don’t see yet how nanopore sequencing will be used to analyze an entire proteome like we do now with mass spec. Certain protein modifications might be relatively easy to identify, such as ubiquitin or glycosylation, which create large molecular trees that branch out form the protein. Mapping out the heterogeneity created by smaller modification will pose a bigger challenge.”

He immediately adds though that everything depends on the question and the type of modification. “There are disease states characterized by aberrant splicing, like one specific type of dementia we are currently researching,” he says. “Interestingly, we don't detect these products of aberrant splicing in the plasma with mass spec. It's possible they could be more easily identified through sequencing.”

“There is no doubt we will be facing a huge pile of proteomic data in the near future. The question is: what are we going to learn from it?”

Turning data into insights

As new technologies are vetted and refined, proteome readouts will increase exponentially. Thermo stated it expects to be able to read out a vast number of proteomes on its new mass spec devices. “There is no doubt we will be facing a huge pile of proteomic data in the near future,” says Gevaert. “The question is: what are we going to learn from it?”

Which method will prove most useful and reliable? The answer will most likely depend on what users are trying to achieve. One thing is for sure: mass-spec-based proteomics is here to stay. It is an inherently unbiased method, which in principle allows you to measure everything that comes out of the column. It remains extremely powerful, especially in the context of exploratory research.

“Its biggest advantage is its sensitivity to discern modified proteins and peptides. When it comes to routine medical applications, however, almost no one is looking at mass spec, as it is after all still quite expensive.”

First in biopharma and for fast diagnostics

The experts agree that emerging targeted approaches would be particularly interesting for clinical settings.

Gevaert: “Targeted protein affinity technology is very interesting when making the translation towards the clinic. You don’t need to have a single biomarker but can deploy a panel of different indicators, based on affinity.”

New technologies like nanopore sequencing could in theory be a match for questions calling for high sensitivity, but it would require these methods to become a lot more powerful in distinguishing the proteomic heterogeneity than wat is possible today.

Devos suggests that the biopharmaceutical industry could be the primary beneficiary. “The low-hanging fruit would be for such companies to use sequencing approaches to efficiently screen or quality-control their products. This would entail analyzing a single purified protein or variations of the same protein – which would be more feasible and realistic as a first step, rather than tackling complex proteomes right away.”

Brainstorms are already ongoing on how to integrate protein sensors into fermenters to profile and sequence products in real time as they are produced – a concept that holds significant promise.

Another big advantage of single-protein approaches is the fact that they allow you to count individual molecules, says Gevaert: “You will be able to determine the exact number of copies of the intact, the modified, and the processed version of a protein. While mass spec can reliably pick up modifications, you have no clue about the actual amount of protein molecules that is modified.”

One setting in which he believes this could be useful is, for example, interventions to the microbiome. If you could assess the abundance of each bacterial strain in your gut, you could measure how certain manipulations alter their levels. He agrees with Devos though that the first step would most likely be to measure biologicals.

Next up, will be diagnostic purposes, says Devos: “Targeted approaches will allow you to dive into the low-abundant proteome in plasma, a non-invasive and cheap approach.”

For use as an R&D tool, proteomics platforms don’t necessarily need to be read out in real time, and cost per amino acid will be a much more important determinant. There may be clinical applications though, where you want a fast answer.

“In acute situations a fast pipeline will be valuable,” says Peumans. “With proteomics this might be even more extreme than in the case of genomics. Sepsis would be an example of a clinical emergency where you want a real quick answer.”

"Input of and collaboration with end-users is essential."

Leveraging imec’s nano- and digital technologies

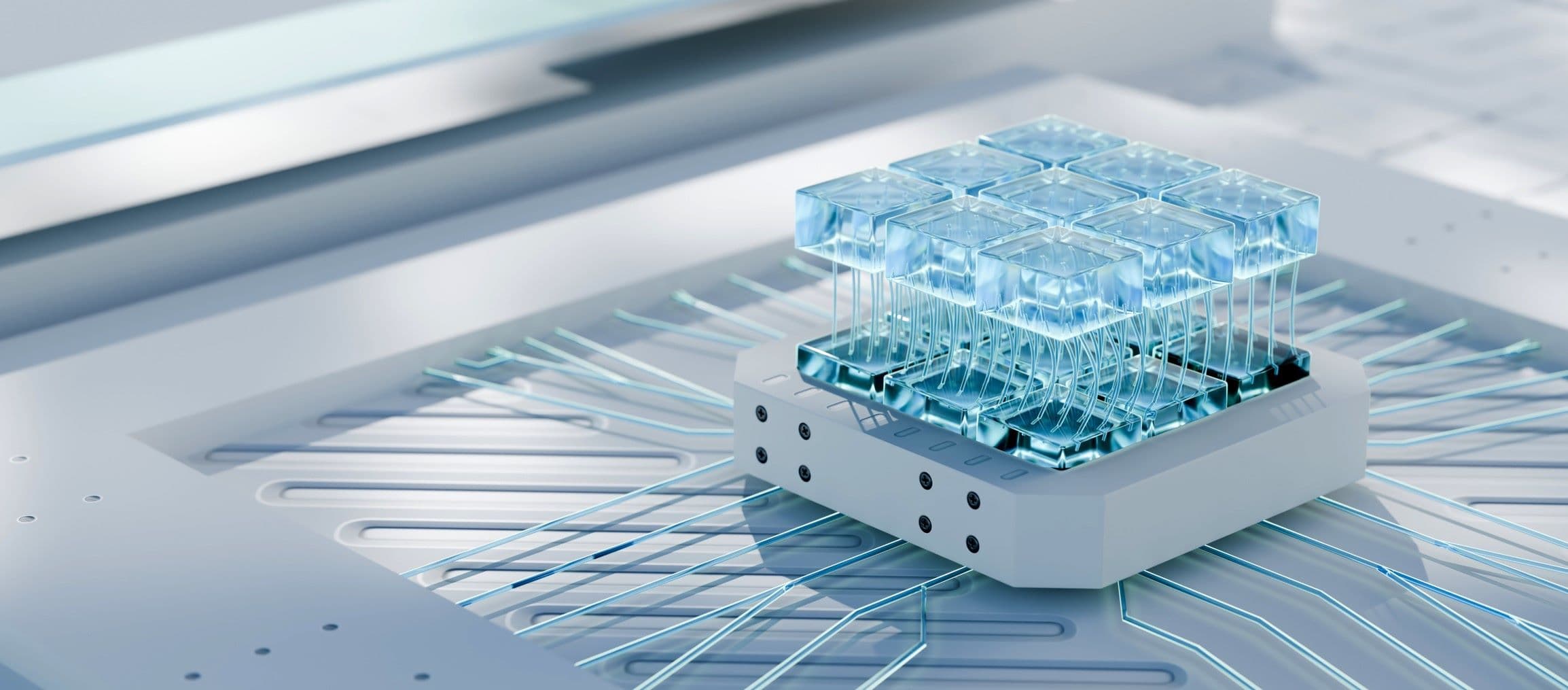

“Just as we did with genomics, we want to help accelerate the proteomics revolution,” states Peumans. “From integrated photonics to smart fluidics or nanopores, we believe that a lot of the building blocks and technologies we develop at imec can help improve emerging proteomic technologies.”

Such solutions will need to be increasingly reliable, high-throughput, and sensitive. They will need to be small and mass-manufacturable at the same time. This is where imec’s world-class expertise in nano- and digital technology can play a vital role.

But despite imec's unique know-how and infrastructure, Peumans acknowledges that they can’t move the needle on their own. “We have deep expertise at imec but, in the end, we only have a vague notion of what medical researchers and analysts exactly need,” he says. “As every design choice you make depends strongly on the actual use of a product or technology, input of and collaboration with end-users is essential.”

Imec’s goal is to work with ventures, mid- and large-scale companies in the proteomics field to help design, develop and manufacture creative solutions. With its strong track record in pre-competitive collaboration along the entire R&D pathway, it is uniquely positioned to catalyze innovation in this quickly evolving field.

“We believe that sequencing the entire proteome is becoming possible and that now the time has come to develop the technology to do so,” says Peumans. “It will undoubtedly take us a few years to get there, but this is the time to put all cards on the table.”

Dr. Devos is Manager of the VIB Proteomics Core. He obtained his MSc and PhD in Biochemistry from the University of Ghent in 2011 and 2015, respectively. After completing postdoctoral training at UGhent and VIB, focusing on Single Molecule Proteomics, Devos was appointed as head of the Proteomics Core at VIB in 2022. The Proteomics Core provides access to and expertise in a diverse set of leading-edge, mass spectrometry-driven proteomics technologies and has become a reference center for proteome analyses in Flanders and beyond.

Prof. dr. Gevaert obtained his PhD in Biotechnology in 2000 at Ghent University (Belgium). He was a post-doctoral fellow with the Fund for Scientific Research (Flanders, Belgium) until 2006 and was appointed Professor in Functional Proteomics at Ghent University in 2004. Since 2005 he heads the Functional Proteomics group of the VIB Department of Medical Protein Research and also the VIB Proteomics Expertise Centre (www.vib.be/pec). In 2010 he was appointed Full Professor at Ghent University and is currently Associate Director of the VIB Department of Medical Protein Research. The Gevaert lab focuses on the introduction of proteomic technologies to address challenging questions in life sciences. Gevaert and his team try to understand why proteins are N-terminally acetylated, study the function of N-terminal proteoforms, and develop technologies to identify cytolysis proteins as disease proxies.

Peter Peumans is CTO Health at imec, a world-leading R&D and innovation hub in nanoelectronics and digital technologies. Through the years, imec has leveraged its chip engineering expertise to build miniaturized systems for discovery, omics, and diagnostic applications. Peumans has a keen interest in exploiting the ability to integrate electronics, fluidics and photonics into systems-on-a-chip to address challenges in advanced therapy manufacturing.

Published on:

9 September 2024